As games become larger and more complex, developers are constantly looking to deliver the biggest worlds possible. Naturally, things can get a little tedious when everything has to be crafted by hand, and procedural generation may not always be the answer. Enter Artomatix, a physically based rendering (PBR) texture suite that streamlines much of an artist’s workload. Besides automated texture generation and growth, Artomatix specializes in seam removal, gradient texture removal and more.

GamingBolt spoke to Artomatix CTO Eric Risser about the software and how it can help game developers among other things.


Artomatix sounds like a dream come true for video game developers. Can you explain what it is and how it works?

AAA game studios spend a lot of time (and money) building big virtual worlds. Some parts of building these worlds are fun and highly creative, but other parts, such as removing seams in materials, can become really tedious and time-consuming. Artomatix is a solution that automates these tedious, not very creative tasks. We accomplish this using neural networks, statistics and a whole lot of passion for the video game industry!

Examples of use cases we cover today:

- Removing seams on organic or structured PBR materials;

- Texturing big assets or open 3D worlds without obvious repeats in Unity or Unreal through a dedicated offering called Infinity Tiles;

- Mending your 3D scan through our proprietary texture mutation or in-filling features.
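The interview doesn’t describe how in-filling works. As a point of contrast, here is a minimal sketch of the naive baseline: a hypothetical “onion-peel” fill that closes a hole from its border inward by averaging known neighbours. The function name and the `None`-marks-a-hole convention are assumptions for illustration; this is not Artomatix’s proprietary method.

```python
from typing import List, Optional

Grid = List[List[Optional[float]]]


def onion_peel_infill(img: Grid, max_passes: int = 1000) -> Grid:
    """Fill missing pixels (None) from the hole's border inward.

    Each pass fills every missing pixel that touches at least one known
    4-connected neighbour, using the mean of those neighbours. Values filled
    in one pass only become visible to the next pass. Pixels with no path to
    known data stay None.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]  # never modify the caller's image
    for _ in range(max_passes):
        updates = {}
        for y in range(h):
            for x in range(w):
                if out[y][x] is not None:
                    continue
                known = [out[ny][nx]
                         for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                         if 0 <= ny < h and 0 <= nx < w and out[ny][nx] is not None]
                if known:
                    updates[(y, x)] = sum(known) / len(known)
        if not updates:
            break  # hole closed, or nothing left that touches known data
        for (y, x), value in updates.items():
            out[y][x] = value
    return out
```

On a row like `[0.0, None, None, 3.0]` this yields `[0.0, 0.0, 3.0, 3.0]`: it smears the surrounding values into the hole rather than synthesizing new detail, which is the kind of limitation a texture-synthesis approach aims to overcome.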

Two very exciting features that Artomatix is working on are style transfer on 3D assets and 3D hybridization. Style transfer applied to 3D assets will enable studios to recycle many of their old assets or fix style mismatches in the work they receive from outsourcers, and will let 3D artists around the world use assets from repositories more often. As for 3D hybridization, it allows people to generate infinite variations of untextured and textured meshes, which we believe will play a key role in the AI-powered creation of 3D worlds, and that, we believe, is the future.

Our current offering is cloud-based and runs in a web browser, due to the computationally heavy nature of our tech. We’re working on an installable version of Artomatix that will run on people’s computers and will need only minimal Internet access, used to handle the heavy processing. This version will handle batch processing of assets, as well as many more use cases that we couldn’t get working in a browser due to its limitations.

What differentiates the AI-driven building process of Artomatix from something like procedural generation?

If I were to put it in one word: automation. Procedural generation is the process of making art by writing code. Once you write the code to make a specific art asset, you can make a lot of those assets with various randomized characteristics (which you program into the procedure), but there’s no “free lunch” in this way of doing things. You, the artist, are still doing a lot of work and spending a lot of time authoring these procedures. In contrast, our AI-driven process actually does all the work for you.
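To make the contrast concrete, here is a toy procedural generator of the kind described above. The brick-wall rules, names and parameters are all hypothetical; the point is that every rule had to be written by a person, and only the parameters the author chose to expose vary from seed to seed.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Brick:
    x: float       # left edge, in metres
    y: float       # bottom edge, in metres
    width: float
    height: float


def brick_wall(seed: int, wall_width: float = 4.0, rows: int = 5,
               brick_height: float = 0.25,
               min_width: float = 0.4, max_width: float = 0.8) -> List[Brick]:
    """A toy, hand-authored procedure. Every rule here (straight rows, the
    width range, clipping the last brick to the wall edge) had to be decided
    and coded by a person; only the brick widths are randomized."""
    rng = random.Random(seed)  # same seed -> same wall
    bricks: List[Brick] = []
    for row in range(rows):
        x = 0.0
        while wall_width - x > 1e-9:  # tolerance guards against float round-off
            width = min(rng.uniform(min_width, max_width), wall_width - x)
            bricks.append(Brick(x, row * brick_height, width, brick_height))
            x += width
    return bricks
```

Calling `brick_wall(seed=1)` twice returns the same wall, and a new seed gives a new variation, but producing stone, wood or cobblestone would mean writing a new procedure each time.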

That’s the difference from a user’s perspective. From an engineer’s perspective, it’s a completely different and unrelated technology. Under the hood, our approach makes heavy use of statistics-based machine learning fused with neural networks that drive the creative process. Essentially, we train a neural network on millions of images until it starts recognizing and understanding the atomic components that make up images and how those components fit together.

This is essentially the same learning phase that infants go through as they learn to parse the world. From there, we can use the now-trained neural network to actually make images. How this works is a bit technical for this interview, but at a high level, the neural network is shown a texture or material and then imagines new ones that share the same characteristics. On top of that, the entire process is guided and controlled by statistical machine learning, which steers it towards desirable, user-defined characteristics.
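The answer keeps the mechanism high-level and doesn’t name the statistics involved. One widely published way to summarize a texture for neural synthesis, from the neural texture synthesis work of Gatys et al., is the Gram matrix of a network layer’s feature maps: the correlation between every pair of channels, averaged over all positions. The sketch below assumes the feature maps have already been extracted by some network; it illustrates that published idea and is not a description of Artomatix’s pipeline.

```python
from typing import List

Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """features[c][p] is the activation of channel c at spatial position p.
    Returns the C x C matrix of channel correlations averaged over positions,
    so WHERE a feature occurs is discarded but WHICH features co-occur is kept."""
    n = len(features[0])
    return [[sum(a * b for a, b in zip(fi, fj)) / n for fj in features]
            for fi in features]


def style_distance(f1: Matrix, f2: Matrix) -> float:
    """Sum of squared differences between the two Gram matrices."""
    g1, g2 = gram_matrix(f1), gram_matrix(f2)
    return sum((a - b) ** 2 for r1, r2 in zip(g1, g2) for a, b in zip(r1, r2))
```

Because positions are summed out, shuffling where features appear leaves the distance at zero. A synthesizer that matches these statistics therefore produces new images that share the input’s characteristics without copying its layout.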

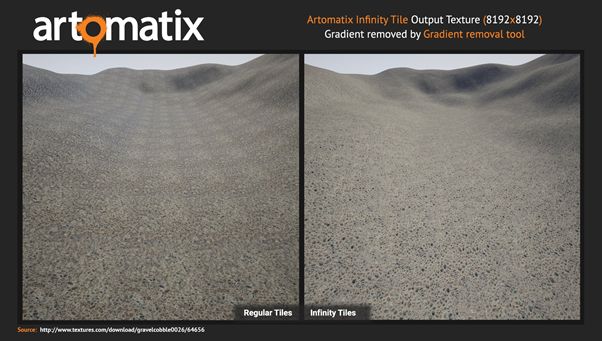

How does the Infinity Tile technology help to provide freshness to the art? What are the minimum required art assets that Artomatix needs to really be viable?

An Infinity Tile is essentially a single ‘smart’ texture that internally consists of 16 sub-textures that all fit together interchangeably. An algorithm, which comes packaged in our Unity and Unreal plugins, is then applied to the tile: it shuffles the UV space of the Infinity Tile so that the 16 sub-tiles are constantly re-arranged, creating an infinite, non-repeating pattern. This is a fusion of our AI-driven art creation, which generates the 16 tiles, and a procedural approach, which re-arranges them automatically.
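The re-arranging step can be sketched as a UV remapping. This is a minimal sketch under stated assumptions: the 16 sub-tiles are laid out as a hypothetical 4 x 4 atlas, each unit cell of world UV space picks a sub-tile with a simple integer hash, and `cell_hash` and `infinity_tile_uv` are illustrative names, not the plugins’ API.

```python
import math
from typing import Tuple

ATLAS_DIM = 4  # assumption: the 16 sub-tiles sit in a 4 x 4 grid inside one texture


def cell_hash(cx: int, cy: int, seed: int = 0) -> int:
    """Cheap deterministic integer hash of a grid cell (illustrative, not cryptographic)."""
    h = (cx * 73856093) ^ (cy * 19349663) ^ (seed * 83492791)
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    return h ^ (h >> 16)


def infinity_tile_uv(u: float, v: float, seed: int = 0) -> Tuple[float, float]:
    """Map an unbounded world-space UV to a UV inside the 4 x 4 atlas.

    Every unit cell of world UV space is assigned a pseudo-random sub-tile;
    the position inside the cell is kept, so each cell shows a full sub-tile.
    """
    cx, cy = math.floor(u), math.floor(v)
    fu, fv = u - cx, v - cy                         # local position in [0, 1)
    index = cell_hash(cx, cy, seed) % (ATLAS_DIM * ATLAS_DIM)
    tx, ty = index % ATLAS_DIM, index // ATLAS_DIM  # sub-tile column and row
    return (tx + fu) / ATLAS_DIM, (ty + fv) / ATLAS_DIM
```

In an engine, the same arithmetic would run per pixel in a shader. Arbitrary per-cell choices only look seamless because, as described above, the sub-tiles are authored to fit together interchangeably; shader versions typically also need care with mipmap selection where the remapped UVs jump at cell borders.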


Infinity Tiles allow Environment Artists to add texture to huge stretches of terrain without worrying about weird patterns or artefacts emerging. Since Environment Artists have already developed workarounds for this problem, Infinity Tiles can be used to enhance those solutions, effectively eliminating tiling artefacts for good!

Since Infinity Tiles simply re-arrange a texture’s features in a smart way, you get out what you put in! Of course, you can use the rest of the Artomatix suite to improve the quality of your textures before using them as Infinity Tile input.

Which game engines currently support Artomatix and are you working with other developers to support their technology?

You can jump in and start using Artomatix Infinity Tiles with either Unity or Unreal. We’re in discussions with other engine makers, but everything’s still under NDA. We’re also working directly with AAA developers, as well as with VR pioneers outside of games, but unfortunately our lips are sealed by NDAs there too.

Can you tell us more about the technology’s Seam Removal and how it intelligently parses PBR textures together in a natural way?

Sure. The best way to talk about our Seam Removal technology is to frame it against the approach people generally use today. If you’re an artist and need to remove a seam, you would use the clone stamp tool in Photoshop to copy patches from the middle of the texture into the border regions, blending the borders of each patch into the background texture. Procedural methods (known as “texture bombing”) are essentially a mechanized version of this process, where patches of texture are randomly “bombed” all over the seams and blended in. This can work for highly stochastic textures such as grass or asphalt, but it fails on anything with structure, and that’s where Artomatix saves the day.
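Following the description above, here is a deliberately naive, content-blind version of texture bombing applied to the left/right wrap-around seam of a grayscale texture. The function name, patch size, bomb count and square feathering are arbitrary illustrative choices, not any particular tool’s implementation.

```python
import random
from typing import List

Image = List[List[float]]


def texture_bomb_seam(img: Image, patch: int = 4, bombs: int = 8, seed: int = 0) -> Image:
    """Paste random interior patches so they straddle the vertical wrap-around
    seam (between column w-1 and column 0), blending each with a feathered
    weight that is highest at the patch centre. The operation never looks at
    what the texture contains."""
    rng = random.Random(seed)
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    half = patch // 2
    for _ in range(bombs):
        sy = rng.randrange(0, h - patch + 1)             # source patch, top row
        sx = rng.randrange(half, w - patch - half + 1)   # source patch, left column (interior)
        dy = rng.randrange(0, h - patch + 1)             # destination, top row
        for py in range(patch):
            for px in range(patch):
                x = (px - half) % w  # wraps, so the patch covers both sides of the seam
                # feather: normalised distance from the patch centre, 0 = centre
                d = max(abs(px + 0.5 - patch / 2), abs(py + 0.5 - patch / 2)) / (patch / 2)
                alpha = max(0.0, 1.0 - d)
                out[dy + py][x] = alpha * img[sy + py][sx + px] + (1 - alpha) * out[dy + py][x]
    return out
```

On a horizontal gradient, whose wrap-around seam is a hard jump from 1.0 to 0.0, the bombed rows end up with a much smaller jump. On a brick or tile pattern, the same blind blending would smear mortar lines across the seam, which is the failure on structured textures described above.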

Texture bombing isn’t aware of the content of the texture it’s working on, so it performs the same operation regardless of the input. In contrast, our method is “smart” in that it looks at the structure of the texture it’s going to synthesize; it learns the patterns and adapts itself to the structures it finds in the texture. During synthesis, it doesn’t just copy patches; rather, it rebuilds the texture at the pixel level so that the overall look and structures are statistically similar to the input. Our approach doesn’t see pixels the way humans see pixels, as colors, normals and displacements; rather, our technology sees “super pixels” containing all of this information at once, so when it builds new materials it takes all of that information into account. That’s the key to synthesizing new PBR textures in a natural way.
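The “super pixel” idea can be illustrated with a brute-force patch comparison. This is a minimal sketch under stated assumptions: the seven-channel texel layout, the weights and the nearest-patch search are hypothetical stand-ins, since the interview says Artomatix’s synthesis is neural-network-driven and doesn’t detail it.

```python
from typing import List, Sequence

# Assumed texel layout: albedo R, G, B | normal X, Y, Z | height (displacement)
SuperPixel = List[float]
Patch = List[SuperPixel]


def super_pixel(albedo: Sequence[float], normal: Sequence[float], height: float) -> SuperPixel:
    """Pack every PBR map's value at one texel into a single feature vector."""
    return [*albedo, *normal, height]


def patch_distance(a: Patch, b: Patch, weights: Sequence[float]) -> float:
    """Weighted squared distance between two equally sized patches of super pixels."""
    return sum(w * (x - y) ** 2
               for pa, pb in zip(a, b)
               for x, y, w in zip(pa, pb, weights))


def best_match(target: Patch, candidates: List[Patch], weights: Sequence[float]) -> int:
    """Index of the candidate patch closest to the target under the given weights."""
    return min(range(len(candidates)),
               key=lambda i: patch_distance(target, candidates[i], weights))
```

For a target texel with a mid-grey albedo and an upward-facing normal, color-only weights `[1, 1, 1, 0, 0, 0, 0]` pick a candidate with the identical color but a tilted normal, while all-channel weights `[1] * 7` pick the candidate whose color is slightly off but whose normal matches. Comparing whole super pixels keeps the albedo, normal and height maps consistent with each other.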

To what extent can PBR textures be mutated? Can you tell us of some instances where new textures were created as a result?

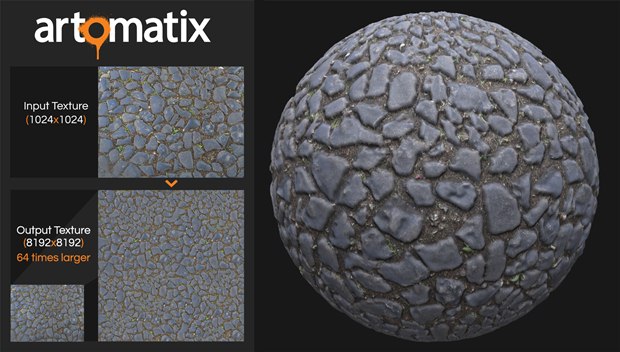

With our Texture Mutation, textures can be organically “grown” up to 8k in size. In fact, this limit is imposed entirely by hardware, so you may see even larger outputs in the future.

In any case, a picture is worth a thousand words, so here’s an example of our mutate feature in action. We created a new instance of this cobblestone texture with 64 times the coverage of the original, and it is self-tiling as a bonus.
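Two quick checks help read the cobblestone example. Coverage is area, so 64 times the coverage means 8 times the side length. With a hypothetical 1024-pixel input that would land exactly at the 8k limit mentioned above, though the interview doesn’t state the input size. The second function is a rough, hypothetical test for the “self-tiling” property: it compares the jump across the wrap-around border with ordinary neighbour-to-neighbour steps.

```python
import math
from typing import List


def side_scale(coverage_ratio: float) -> float:
    """Area grows with the square of side length, so 64x coverage is 8x per side."""
    return math.sqrt(coverage_ratio)


def horizontal_tiling_ratio(img: List[List[float]]) -> float:
    """Mean jump across the left/right wrap-around border divided by the mean
    jump between ordinary horizontal neighbours. Around 1.0 the border looks
    like any other pixel step; much larger values indicate a visible seam.
    (The top/bottom border can be checked the same way on the transposed image.)"""
    h, w = len(img), len(img[0])
    interior = [abs(img[y][x + 1] - img[y][x]) for y in range(h) for x in range(w - 1)]
    border = [abs(img[y][0] - img[y][w - 1]) for y in range(h)]
    mean_interior = sum(interior) / len(interior)
    mean_border = sum(border) / len(border)
    if mean_interior == 0.0:  # flat image: any border jump is a seam
        return 1.0 if mean_border == 0.0 else math.inf
    return mean_border / mean_interior
```

A ramp like `[0, 1, 2, 3]` scores 3.0 (visible seam), while a periodic row like `[0, 1, 2, 1]` scores 1.0.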


What is the current outreach of Artomatix to smaller developers?

Ongoing and always increasing. I would even go as far as to say that most of our growth, understanding and development as a company stems from our relationships with smaller developers rather than the bigger studios we’ve dealt with. Smaller developers are all unique in their own way; each with a different dream of what they want to create. Speaking with them and understanding what we can do to help them best achieve their goals has always been a driving force behind our own growth and technological advancements, so I guess that you could say our outreach to them is pretty extensive.

How much reduction in production overhead has Artomatix had for some developers? What kind of potential does it hold for future triple-A development?

The need for 3D content is about to experience a state change. The 3D market will almost triple in the next six years, which, coupled with decreasing per-asset prices, means people will need a LOT of assets. Tie this to rising labor costs in the countries that have relied on a cheap workforce to offer outsourcing services, and you have the recipe for a big headache!

It is our firm belief that AI will play a key role in addressing this booming need for content. Need to texture an endless 3D world? Use our Infinity Tiles. Need to adapt your assets to a certain style? Use our 3D Style Transfer (in development). Need to create endless variations of certain assets? Use our 3D Hybridization (in development). Implemented right, these features are bound to have a lasting impact on the industry, as they lower the cost of 3D asset creation by an order of magnitude.

So to come back to the question: it’s not so much about reducing the production overhead as it is about addressing the strong need for content.

As for the potential that Artomatix, and AI applied to 3D content creation, have in the industry: we believe it’s enormous. With the right ingredients and a lot of effort, we believe the industry will be able to create highly detailed, immersive 3D worlds in a fraction of the time it takes today.

What kind of performance challenges have you had to overcome for Artomatix’s smooth operation? Have there been any specific problems that developers have faced?

When we started, our core algorithms were implemented using a normal computer processor. To generate a single texture took roughly half an hour. We have since ported our code to run on GPUs, and we can now perform this same task in mere seconds.

The reason is that our algorithms perform millions of small calculations across all the pixels in the image, and running them in parallel on the GPU is much faster.


What are your plans for the future with Artomatix?

What wakes us up every morning is the desire to have a positive, lasting impact for as many people as possible with Artomatix’s technology. We believe we’ll reach this goal if we bring to market a radically new way of creating immersive experiences, one that hinges on curation rather than tedious, floor-to-ceiling creation.

To reach this big, ‘hairy’ goal we certainly have a few steps along the way, such as:

- Release an application version of Artomatix. Our v1 runs in a web browser using WebGL. Our goal was to get this technology out into the world with as few barriers to entry as possible. Unfortunately, we had to make a lot of usability compromises when working in a browser, and we’ve been seeing strong demand for a more robust system that’s better integrated into standard production pipelines. We’re currently building a v2 of Artomatix that you can install on your machine. It will still be cloud-based, as the process needs really powerful GPUs at certain points, but overall the system should be more stable, easier to use and a lot faster!

- Asset recycling. This is a bit of a paradigm shift. Currently, when you’re making a game, you have to make all your assets from scratch. A lot of games have a unique art style, which means you can’t recycle old assets from previous games; they just won’t fit the style. Alternatively, if you’re making a realistic-looking game, you still generally can’t reuse old assets because they’re low resolution or follow outdated standards. We’re working on two new technologies that are going to change all this: “Style Transfer” and “Super Resolution”. In the future, you’ll be able to grab 90% of your assets off of TurboSquid or the Unity Asset Store and import them into your game, where their resolution and style are automatically updated to fit nicely in your virtual world.

- Bring 3D Hybridization to market. This is the ability to automatically create shapes based on examples. This is a technology that we’ve been developing for a while now which we believe is key to accomplishing our vision.


What are your thoughts on the PS4 Pro and Xbox Scorpio?

I believe that three recent technology factors or market shifts have led to these “Pro and Scorpio” upgrades. These are:

(1) The introduction of VR as a consumer technology.

(2) The widespread uptake of 4k TVs.

(3) The recent launch of a new generation of GPUs based on a much better/faster/cheaper underlying technology. This means that console providers can double GPU performance without increasing costs.

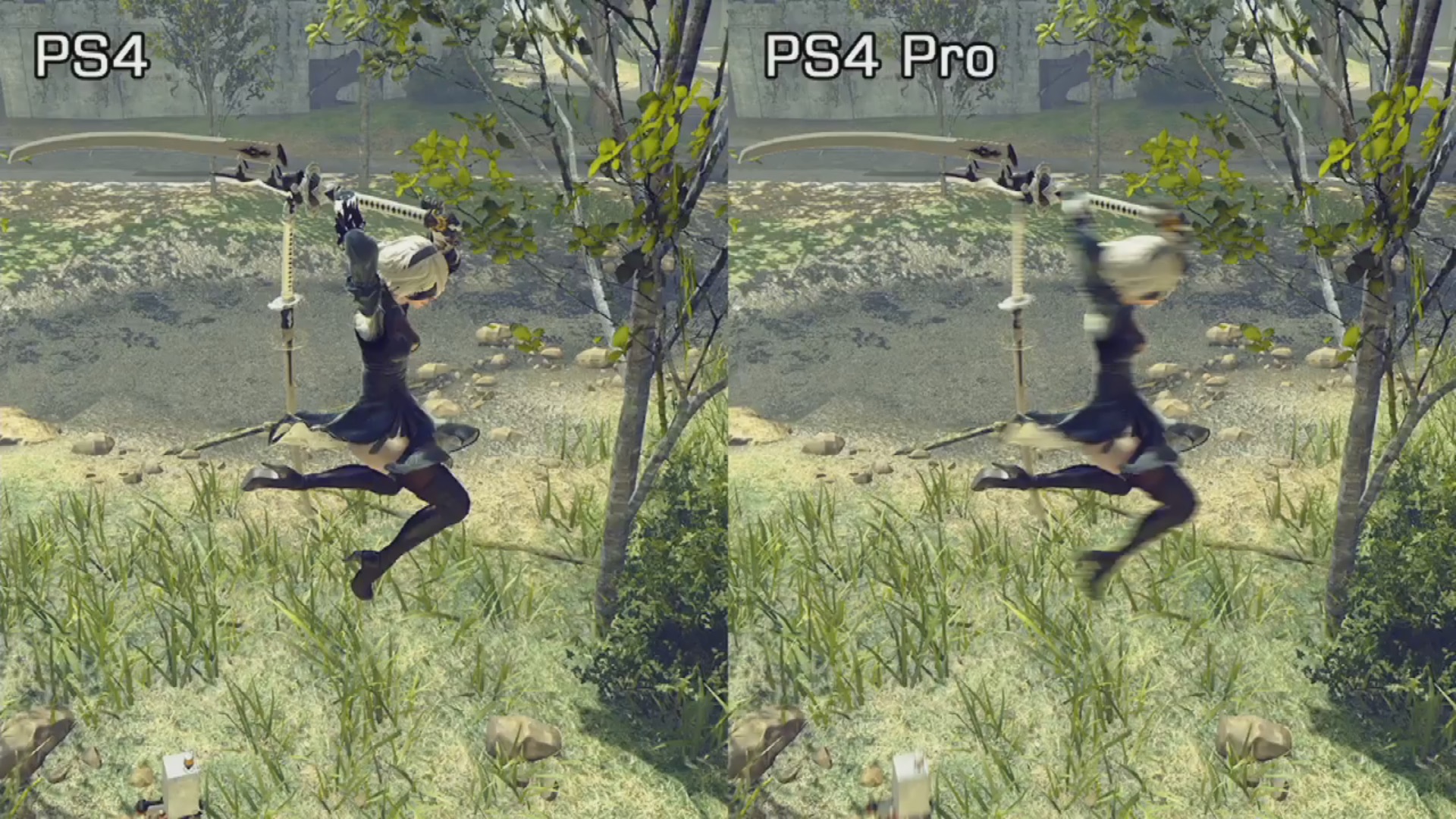

When gaming on a monitor, your console only needs to render one picture at a time. When gaming in VR, though, your console needs to render two pictures at the same time, one for each eye. This is essentially the big computational cost of switching to VR: you need double the GPU. The main difference between the PS4 and PS4 Pro is a GPU with double the cores. Personally, I don’t think of the Pro as an upgrade; I think of it as the “Virtual Reality edition” of the PS4. While the Scorpio specs haven’t been released yet, I suspect they’ll follow the same trend as the PS4 Pro… they’ll at least double the compute cores so you can play Xbox in VR.

So what does this extra processing mean for gamers who aren’t interested in VR? It could mean one of two things: you either keep the quality of each pixel the same and increase the number of pixels (i.e. 4k), or you keep the number of pixels the same and increase the quality of each pixel.

4k essentially has the same problem as VR: you need to render a lot more pixels. VR is a 2x increase, while 4k is 4x the number of pixels a console was designed to render. The PS4 Pro can’t do true 4k rendering, since Sony only doubled the number of cores in the GPU. Instead, it uses a “checkerboard” upscaling approach to hallucinate the detail, which is probably a topic of discussion all on its own.
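The pixel arithmetic behind these ratios can be checked directly. Pixel count is only a rough proxy for GPU cost, and treating VR as two full 1080p views is an assumption chosen to match the interview’s “2x” figure. The same arithmetic adds one step to the argument: rendering half of a 4k frame is exactly twice the 1080p pixel count, which lines up with a GPU that roughly doubles the cores.

```python
def pixel_budget(width: int, height: int, views: int = 1, fraction: float = 1.0) -> float:
    """Pixels shaded per frame: resolution x number of views x fraction actually rendered.
    Pixel count is only a rough proxy for GPU cost."""
    return width * height * views * fraction


BASE = pixel_budget(1920, 1080)  # 1080p: the resolution the original consoles were designed around

workloads = {
    # Assumption: each eye rendered at full 1080p, matching the interview's "2x" figure.
    "VR, two 1080p views": pixel_budget(1920, 1080, views=2),
    "native 4k (3840 x 2160)": pixel_budget(3840, 2160),
    "checkerboard 4k (half the pixels)": pixel_budget(3840, 2160, fraction=0.5),
}

for name, px in workloads.items():
    print(f"{name}: {px / BASE:.1f}x the 1080p pixel count")
```

This prints 2.0x, 4.0x and 2.0x respectively.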

I think the interesting scenario is where players choose to use their “Pro” consoles on a 1080p screen, because now game developers can actually improve the look of their games. This is common in PC gaming, where players can choose their own “render settings” to control how good the game looks.

Unfortunately, it’s too early to tell what the demand will be for better-looking console games and whether developers will bother to offer improved graphics. I think Mark Cerny’s statement that porting from PS4 to PS4 Pro will be a trivial effort is probably accurate. The main component that’s changed is the GPU, and they’ve essentially just increased the number of cores rather than changing the underlying architecture. Assuming developers build their console games on one of the leading commercial game engines (e.g. Unity, Unreal), they’ll have a lot of built-in, off-the-shelf options for improving the look of the game without having to do any additional work themselves.

For example, anti-aliasing, motion blur, bloom effects and parallax occlusion mapping are all graphical improvements that can be turned on or off just by flipping a switch in the code. In the PC world, we give players the option to choose these settings themselves based on what GPU they have and what frame rate they’re comfortable with. On consoles, I think it will be up to developers to choose between two presets based on which console they detect.
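The “two presets” idea amounts to a small lookup table. In this hypothetical sketch, the preset names, the effect assignments and the `select_preset` helper are invented for illustration. Console detection is platform-SDK-specific, so the detected model is simply passed in as a string.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GraphicsPreset:
    anti_aliasing: bool
    motion_blur: bool
    bloom: bool
    parallax_occlusion_mapping: bool


# Hypothetical presets: which built-in engine effects are switched on per console model.
PRESETS = {
    "base": GraphicsPreset(anti_aliasing=True, motion_blur=False, bloom=True,
                           parallax_occlusion_mapping=False),
    "pro": GraphicsPreset(anti_aliasing=True, motion_blur=True, bloom=True,
                          parallax_occlusion_mapping=True),
}


def select_preset(console_model: str) -> GraphicsPreset:
    """Pick the preset for the detected console; unknown models fall back to 'base'."""
    return PRESETS.get(console_model, PRESETS["base"])
```

Falling back to the base preset is the conservative choice: a model the game doesn’t recognize gets settings known to run on the original hardware.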


Typically, when we see a boost to graphics, it’s part of a new console generation that also brings a CPU and RAM upgrade. In general, a stronger GPU means you can display more stuff on screen, which means you need more memory to store that virtual world and a better CPU to handle all the A.I. and physics for the now more complex environment.

I think one instinctive reaction is to see the GPU power double while all other specs stay the same and wonder if the rest of the console will be able to keep up. It’s important to remember that this GPU boost is just a reaction to VR headsets and 4k TVs; it isn’t intended to give developers the option to build bigger or more complex games. Once games are made that require stronger hardware (e.g. a faster CPU, more RAM), they will no longer work on the original console, which would effectively make this a new generation instead of a new edition.

I realize there’s a lot of marketing hype surrounding these new upgraded versions of each console, but I think the improvements are very incremental and mostly just mid-term updates focused on supporting VR devices. If we look back far enough, we’ve actually seen this sort of thing happen before.

In the early ’90s, compact discs became mainstream, and new consoles like the 3DO were released around this technology, offering video cut-scenes and high-quality music in games. To stay competitive, Sega released the Sega CD add-on for their Genesis console. Nintendo was planning the same and partnered with Sony to develop a CD add-on for the SNES. Ironically, after a good deal of drama, Nintendo dropped the project, and Sony decided to keep working on what would soon become the first PlayStation.

The reality is that everyone was caught off-guard by the Oculus Rift Kickstarter campaign kicking off the rise of VR. Now Microsoft and Sony are in an awkward position. If they were to support VR with the original consoles they brought to market, players would see a big drop in graphics and probably migrate over to PC or, worse, leave a gap in the market for a new direct competitor to emerge. In a perfect world, VR would coincide with the end of a console cycle and they could just design the next generation for VR from the ground up. Unfortunately, at a mere 3 years old, neither console is ready for retirement yet. I think offering a mid-term “VR edition” is a sensible compromise. Of course, there’s going to be a lot of marketing hype over TFLOPs and introducing the “most powerful console ever”, because that’s what marketing people do. Personally, I’m not taking the hype too seriously.

What is your take on Sony’s Checkerboard technique for 4K rendering versus native 4K rendering that Microsoft are espousing with the Scorpio? To the naked eye, what will the difference be? And what are the differences from a development and programming perspective?

The Checkerboard technique shouldn’t be too much trouble to program, as it’s just a simple post-process. They essentially render half the pixels in a checkerboard pattern and then fill in the blank pixels by blurring together the rendered ones. People have been doing tricks like this for decades. As for how it will stack up against true 4k rendering, I honestly can’t say without looking at a few games played side by side. Obviously the quality won’t be as good; the question is whether it will be noticeably bad.
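The simplified version described above, rendering half the pixels and blurring in the rest, fits in a few lines. This sketch follows only that description. The shipping PS4 Pro technique is reportedly more involved (for example, reusing data from the previous frame), and the function names here are hypothetical.

```python
from typing import List, Optional

Image = List[List[Optional[float]]]


def checkerboard_render(full: List[List[float]]) -> Image:
    """Simulate rendering only pixels where (x + y) is even; the rest stay blank (None)."""
    return [[v if (x + y) % 2 == 0 else None for x, v in enumerate(row)]
            for y, row in enumerate(full)]


def checkerboard_fill(img: Image) -> List[List[float]]:
    """Fill each blank pixel with the mean of its 4-connected neighbours.
    In a checkerboard, every 4-neighbour of a blank pixel was rendered."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            if img[y][x] is not None:
                row.append(img[y][x])  # rendered pixel: keep as-is
            else:
                nbrs = [img[ny][nx]
                        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                        if 0 <= ny < h and 0 <= nx < w]
                row.append(sum(nbrs) / len(nbrs))
        out.append(row)
    return out
```

On smooth content such as a gradient, interior pixels are reconstructed exactly. On the finest possible detail, a one-pixel checkerboard, every rendered pixel has the same value, so the filled image comes out completely flat. That is the kind of detail loss the side-by-side comparison would reveal.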

As an expert on the topic of “upscaling” or hallucinating enhanced details from low resolution images, I’m a little disappointed that Sony would go with something simple and outdated like the Checkerboard approach. I wish they’d worked with a company like Artomatix which has expertise on this topic. We’ve been developing upscaling technology utilizing neural networks which is years ahead of the traditional methods. Here’s an example of a 4x upres (so 16x the number of pixels) we performed on Link’s shield:


Although the nerd in me is a little disappointed, I can’t argue with Sony’s logic for going with a signal-processing strategy: such methods are reliable and safe, and it’s what people have been doing for years. That said, I think in the long run, better quality and performance can be gained by using neural network upscaling.

Here at Artomatix, we’ve developed our upscaling technology for a slightly different application. Our focus is upscaling old game assets so they can be reused in new games rather than remade from scratch. In a series like Tomb Raider, for example, the developers have invested a lot of time and money building a library of textures (tree bark, rock, grass, etc.), which they mostly have to abandon when moving to a new console generation that can handle higher-resolution textures.

The idea with our upscaling technology is to recycle those old assets for the next generation. Also, classic games like Ocarina of Time or Shadow of the Colossus could be remastered with better game art, not just rendered at a higher resolution. We’re also exploring our upscaling approach for old video footage. Imagine if The Simpsons from the early ’90s could be automatically re-synthesized to look like the latest season.

I think the same idea could be used for consoles in the future. By using advanced real-time upscaling techniques, it should be possible to actually decrease the number of pixels that are currently being rendered, while getting higher resolution at a better visual quality. Our peers at Magic Pony Ltd. were working on a similar idea for utilizing neural network upscaling on streaming internet video; they were recently bought by Twitter for $150m.


What are your thoughts on the Nintendo Switch? What unique challenges would a system like that pose for game development?

I think it’s still a little early to form an opinion on the Switch. At the moment, I’m more surprised by it than anything else. Traditionally consoles resemble mid-range PCs when they launch. The Switch however looks more like a beefed up cell phone… which has huge implications for developers.

When you make a game, you generally want to support as many platforms as possible, because that gets you access to the most customers and thus the highest return. PS4, Xbox One and PC are all very similar machines under the hood, so if you’re making a game for one, you generally make it for all three. With the Switch running on mobile hardware and probably the Android OS, I can see developers treating the Switch as a mobile device rather than a console. I can see a lot of Switch games getting re-released for Android devices rather than PC… assuming Nintendo doesn’t try to block that legally.

Do you think Nintendo Switch being less powerful than PS4 and Xbox One will matter in the long run for the new console?

I don’t think it matters. Nintendo hasn’t been winning the graphics race since the N64. I think the Wii was significantly under-powered relative to the PS3 and Xbox 360 without gamers being too bothered by it. Nintendo finds other ways to offer an entertaining experience and I for one really appreciate their willingness to innovate.

The one thing I’ll say is that Nintendo does take a big risk when they try something radical and new: sometimes it pays off (e.g. the Wii) and sometimes it doesn’t (e.g. the Wii U). They’re making a big bet that gamers will want a console/tablet hybrid thing, while also risking their relationship with 3rd-party developers by making a console that’s weird and thus harder to develop for. The Switch could be a great success or a huge disaster… I guess it all depends on how well they execute on the concept.