One of the biggest challenges developers face these days is how much texture budget they can allocate to their games. Compare the growth in GPU/CPU power with the growth in RAM and you will find that memory has grown much more slowly. The new consoles, the PS4 and Xbox One, have 8GB of memory, 16 times the 512MB found in the PlayStation 3 and Xbox 360. Even so, it won’t be a big surprise if artists and designers once again start running out of memory. Some upcoming games, such as Watch_Dogs, already require a minimum of 6GB of memory. So it’s safe to assume that memory will eventually become scarce again, and that developers will once again have to optimize their code and build better tools, increasing development costs and time.

Then there is the issue of building a viable technology model for delivering games digitally. Games are not getting any smaller, and some upcoming games are close to 50GB. Studies show that, because of limited bandwidth and slow internet connections, many online gamers abandon a download about halfway through. This is especially relevant for free-to-play games, where the experience is delivered digitally.

It’s safe to assume that new technologies have not reduced these challenges but made them more complicated. This is where Graphine Software, a Belgium-based company, comes into the picture. Graphine Software provides Granite SDK, a middleware that offers high-definition texture streaming and compression. Granite SDK keeps only the texture data that is needed in memory and drastically reduces the disk footprint of your existing texture images, lowering both memory consumption and download size and essentially addressing the two problems discussed above.

So how does all of this work? To find out, GamingBolt got in touch with Aljosha Demeulemeester, CEO of Graphine Software.

According to Aljosha, Granite SDK comes with several benefits, chief among them that it lets artists stream texture data extremely fast.

“Granite SDK is a middleware that handles texture streaming from disk into the (video) memory. You can use it with classic streaming approaches (asset bundles, per texture or per mipmap level) but you get the most benefit out of using virtual texturing. The middleware provides everything you need for this.

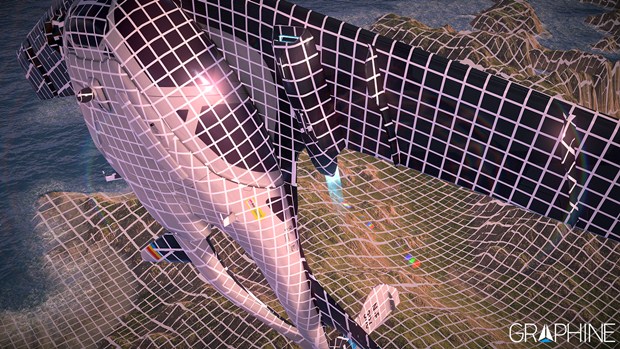

Graphine achieves this through a method called virtual texturing. For those unfamiliar with it, virtual texturing divides textures into small tiles and keeps only the tiles that are actually needed in GPU memory. This reduces the strain on video memory: instead of holding the entire texture data, the GPU only needs the tiles required to render the current frame.
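To make the tile idea concrete, here is a minimal Python sketch of the bookkeeping step: turning the texture coordinates and mip levels sampled in a frame into the set of tiles that must be resident. It is not Graphine’s code; the function name `required_tiles` is hypothetical, and the sketch assumes a square texture and that the samples are already known (many virtual texturing systems obtain them from a low-resolution feedback render pass).

```python
def required_tiles(samples, texture_size, tile_size=128):
    """Map (u, v, mip) samples from the visible frame to the unique tiles they touch.

    samples: iterable of (u, v, mip) with u, v in [0, 1] and mip >= 0.
    Returns a set of (mip, tile_x, tile_y) keys (hypothetical key layout).
    """
    needed = set()
    for u, v, mip in samples:
        level_size = max(1, texture_size >> mip)  # texture edge length at this mip level
        x = min(int(u * level_size), level_size - 1)  # clamp u == 1.0 to the last texel
        y = min(int(v * level_size), level_size - 1)
        needed.add((mip, x // tile_size, y // tile_size))
    return needed
```

Two samples near the same corner of a 16K texture land in the same 128×128 tile, so a frame that only touches that corner and one coarser region needs just two of the thousands of tiles that make up the texture.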

Aljosha explains the process using the example of a 128×128 tile size.

“We call Granite SDK a fine-grained streaming system because we load very small tiles of texture data. This happens in the background while the game is being played. The size of the tiles is configurable, but a commonly used setting is 128×128 pixels. When using virtual texturing, you only load into memory the texture tiles that are actually viewed by the virtual camera. The main benefit is that you can save on the amount of video memory that you need for your texture data, but there are a bunch of side benefits. Loading times are reduced and disk access is more constant and predictable. The bottom line is that you can really increase the graphical fidelity of games while staying within the limits of the current hardware.

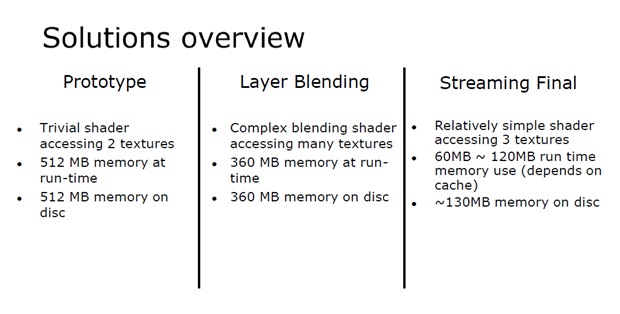

Larian Studios used Granite SDK effectively in Divinity: Dragon Commander. Using the SDK, Larian was able to upscale its 16K×16K landscape textures to 32K×32K, and in some cases even more. This brought memory usage down to 66%, a reduction of 34%. The case study is shown below.

Aljosha further explains how the SDK was integrated into the game.

“The game ‘Dragon Commander’ from Larian Studios, which released in August 2013, was the first game that shipped with the Granite SDK. We were able to integrate the SDK relatively late in the development process. They used it mainly to cut down the memory usage (-82%) and the size on disk (-66%) of their 16K x 16K landscape textures. Most games that use the SDK right now are in an early stage of development and won’t release before 2015. We hope to be able to share some titles soon. Right now, I’m afraid we’re still bound by confidentiality.

Had Larian Studios used layer blending instead, the reduction in texture memory would have been roughly 29%; with Granite SDK, the final texture memory was reduced by almost 66%. This is because the middleware decouples the amount of texture memory required from the amount of texture content in the virtual environment. So even if developers want to improve the textures further, the memory requirement does not increase. Aljosha details how this is possible:

“Game developers, and the 3D artists involved, have a certain vision about their game. One of the challenges of game development is fitting their vision within the boundaries dictated by current hardware. For texture data, one of the important resources is video memory. The larger the video memory, the higher the resolution of the textures can be and the more unique texture assets can be used within a virtual environment.

“Using virtual texturing, we decouple the need for texture memory from the amount of texture content present within the virtual environment. This means that the need for memory does not increase when an artist uses higher resolution textures or more unique assets. In general, streaming techniques have this kind of benefit. However, in practice, artists needed to be careful about the amount of texture data that they apply in each area of the 3D environment. A system that streams small tiles of texture data – like the Granite SDK – allows artists to use much more texture data for their objects and environments without much thought about the data size in memory.
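The decoupling Aljosha describes follows from the tile cache having a fixed capacity: however many tiles the world contains, only `capacity` of them are held at once, and the least recently used one is evicted to make room. Below is a minimal sketch that assumes a simple LRU policy (the interview does not describe Granite’s actual replacement policy); `TileCache` and `load_tile` are hypothetical names.

```python
from collections import OrderedDict


class TileCache:
    """Fixed-capacity tile cache with least-recently-used eviction (illustrative)."""

    def __init__(self, capacity: int):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._tiles = OrderedDict()  # tile key -> tile data, least recently used first

    def request(self, key, load_tile):
        """Return the tile for `key`; on a miss, evict the LRU tile if full, then load it."""
        if key in self._tiles:
            self._tiles.move_to_end(key)     # mark as most recently used
            return self._tiles[key]
        if len(self._tiles) >= self.capacity:
            self._tiles.popitem(last=False)  # evict the least recently used tile
        data = load_tile(key)
        self._tiles[key] = data
        return data

    def __contains__(self, key):
        return key in self._tiles

    def __len__(self):
        return len(self._tiles)
```

Requesting a hundred distinct tiles through a four-slot cache still leaves exactly four tiles resident. Adding more texture content changes how often tiles are swapped, not how much memory the cache occupies.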

“The SDK reduces the need to downscale texture assets by hand when optimizing the final game for shipping. Also, it is a lot easier to create different quality settings for your game. With a single parameter in our system, you can increase or reduce the texture quality when running a game. This affects the amount of memory needed for the texture cache in video memory. This cache contains the texture tiles and remains constant in size when playing with a certain quality setting. The size of the texture cache depends mostly on your video resolution settings and not on the amount of texture content in the virtual world. The end result is of course more detailed and more diverse virtual environments within games.
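Why the cache size tracks screen resolution rather than world content can be shown with a back-of-the-envelope estimate. The heuristic below is an assumption for illustration, not Granite’s formula: roughly one texel per screen pixel at the selected mip level, a `slack` factor for overdraw and partially used tiles, and a hypothetical `mip_bias` quality knob where each step halves resolution on both axes.

```python
import math


def estimate_cache_tiles(width, height, tile_size=128, slack=2.0, mip_bias=0):
    """Rough number of tiles needed to cover one frame (hypothetical heuristic).

    Assumes ~1 texel per screen pixel, a `slack` factor for overdraw and tile
    granularity, and that each step of `mip_bias` quarters the texel count.
    Note that the size of the virtual world does not appear anywhere.
    """
    texels = width * height * slack / (4 ** mip_bias)
    return math.ceil(texels / (tile_size * tile_size))


def cache_bytes(tiles, tile_size=128, bytes_per_texel=4):
    """Video memory for `tiles` uncompressed RGBA8 tiles."""
    return tiles * tile_size * tile_size * bytes_per_texel
```

For 1920×1080 this gives 254 tiles, about 16 MiB of RGBA8 data per texture layer (a real cache holds several layers, such as color and normal, plus headroom for prefetching), compared with about 1.33 GiB for a single fully resident 16K×16K RGBA8 texture with its mip chain.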

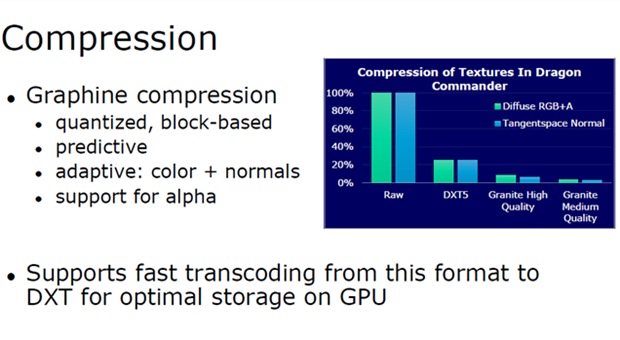

This naturally raises the question of whether the compression is lossless, and if it is lossy, how much quality is sacrificed. And what advantage does it offer over zlib, the industry-standard general-purpose compressor?

Aljosha explains that their compression technique is tailored to the different types of texture data, such as color, normal and specular maps.

“Because we take the data type into consideration, we can compress it better without introducing noticeable compression artefacts at our ‘high quality’ setting. The compression format is scalable so that you can select a quality level (and thus, compression level) that is appropriate for each texture asset. Zlib doesn’t give you the same advantages,” he further explains.
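Graphine has not published how its codec works, so the sketch below only illustrates the general idea of type-aware compression with one well-known trick for normal maps, the same idea behind two-channel formats such as BC5. Tangent-space normals have unit length and point away from the surface, so only X and Y need to be stored and Z can be reconstructed; the bit depth plays the role of a quality level. A general-purpose lossless compressor like zlib cannot exploit this because it knows nothing about what the bytes mean. All function names here are hypothetical.

```python
import math


def quantize(v, bits):
    """Map v in [-1, 1] to an integer code with the given bit depth."""
    levels = (1 << bits) - 1
    return round((v + 1.0) * 0.5 * levels)


def dequantize(q, bits):
    levels = (1 << bits) - 1
    return q / levels * 2.0 - 1.0


def encode_normal(n, bits):
    """Store only X and Y; Z is implied because the normal has unit length."""
    x, y, _ = n
    return quantize(x, bits), quantize(y, bits)


def decode_normal(code, bits):
    x = dequantize(code[0], bits)
    y = dequantize(code[1], bits)
    # tangent-space normals point toward +Z, so take the non-negative root
    z = math.sqrt(max(0.0, 1.0 - x * x - y * y))
    return x, y, z


def max_error(normals, bits):
    """Largest per-component error after an encode/decode round trip."""
    worst = 0.0
    for n in normals:
        d = decode_normal(encode_normal(n, bits), bits)
        worst = max(worst, max(abs(a - b) for a, b in zip(n, d)))
    return worst
```

For normals within about 55 degrees of the surface normal, 8 bits per channel keeps the round-trip error below 0.02, while 4 bits is much coarser. Choosing the bit depth per asset is a crude analogue of the per-texture quality level Aljosha mentions.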

The SDK has been around for a while, and the most recent update, version 2.2, offers a more robust solution than version 2.0. It also adds a new interface for tracking performance statistics, as well as support for HDR textures.

“Granite 2.0 saw the addition of our Tiling back-ends. These are modules specific to each graphics API (e.g., DirectX 11, OpenGL, etc.) that take care of the handling of texture data at the graphics API side. The Granite core is API independent but we realised that setting up caches, translation tables and uploading tiles can be tricky. It takes quite some time to get this right. We’ve added our tiling back-ends to provide our knowledge in this area to our customers in the most accessible way: ready to use, optimized implementations. Most notably, our DirectX 11.2 backend allows you to have hardware accelerated virtual texturing (tiled resources) out of the box.
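The translation table is one of the pieces Aljosha calls tricky: it maps a virtual tile address to the slot in the physical cache that currently holds it. A common software approach, sketched here in Python under that assumption, falls back to the nearest coarser mip level whose tile is resident, so the renderer always has something to draw while the detailed tile streams in. The names are hypothetical, and in a real renderer the table lives in GPU memory and is read by the shader.

```python
class IndirectionTable:
    """Maps virtual tiles (mip, tx, ty) to physical cache slots (illustrative sketch)."""

    def __init__(self, mip_levels: int):
        self.mip_levels = mip_levels
        self._slots = {}  # (mip, tx, ty) -> physical cache slot index

    def map_tile(self, mip, tx, ty, slot):
        self._slots[(mip, tx, ty)] = slot

    def unmap_tile(self, mip, tx, ty):
        self._slots.pop((mip, tx, ty), None)

    def lookup(self, mip, tx, ty):
        """Return (slot, mip_used) for the finest resident tile covering the request."""
        while mip < self.mip_levels:
            slot = self._slots.get((mip, tx, ty))
            if slot is not None:
                return slot, mip
            # the parent tile one level coarser covers a 2x2 block of tiles at this level
            mip, tx, ty = mip + 1, tx // 2, ty // 2
        raise LookupError("no resident tile; keep the coarsest mip level resident")
```

The fallback relies on each coarser mip level halving the number of tiles per axis, so the parent of tile `(tx, ty)` is `(tx // 2, ty // 2)`. Keeping the coarsest level permanently resident guarantees the lookup always finds something.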

“Our latest release, Granite SDK 2.2 now has performance statistics gathering and visualization, a significant revisit of our tools and support for HDR textures. All these additions will help developers to customize their streaming approach and the quality of their textures. We’re making it easier than ever for developers, using our technology, to fine-tune and optimize the performance of their game. Capturing and analysing performance statistics is essential to attain optimal performance. Granite SDK now provides the necessary functionality to monitor the streaming metrics so developers can ensure that the system plays nice with the rest of their application. We’ve revised our tools to make them integrate even easier in a production pipeline and engine.
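Metrics of the kind Aljosha mentions, such as the cache hit rate and how much data is uploaded per frame, can be gathered with a few counters. This is a hypothetical sketch, not the Granite SDK’s statistics API; `StreamingStats` and its methods are illustrative names.

```python
from dataclasses import dataclass, field


@dataclass
class StreamingStats:
    """Per-frame streaming counters (hypothetical, not Granite's API)."""
    hits: int = 0
    misses: int = 0
    frame_bytes: int = 0                         # bytes uploaded in the current frame
    history: list = field(default_factory=list)  # bytes uploaded per finished frame

    def record_request(self, hit: bool) -> None:
        if hit:
            self.hits += 1
        else:
            self.misses += 1

    def record_upload(self, nbytes: int) -> None:
        self.frame_bytes += nbytes

    def end_frame(self) -> None:
        self.history.append(self.frame_bytes)
        self.frame_bytes = 0

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 1.0

    def peak_upload(self) -> int:
        return max(self.history, default=0)
```

A low hit rate or spikes in `peak_upload()` are the kind of signal that tells a developer the cache is too small or the streaming budget too tight for a given scene.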

“The build speed of streamable texture files (our Granite Tile Set files) has significantly increased. Most importantly, our import tool now allows for incremental updates that ensure a short time span between modifying an individual texture asset by an artist and having an updated Granite Tile Set file. Such a file can potentially contain thousands of individual texture assets. With update 2.2 of the Granite SDK, we now also support the use of HDR textures. This will enable an entirely new spectrum of techniques for more accurate lighting.
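Incremental updates of this kind are commonly built on content hashes, so that only assets whose bytes changed since the last build are re-tiled. The sketch below assumes that approach; the interview does not say how Granite’s import tool detects changes, and `assets_to_rebuild` and the manifest format are hypothetical.

```python
import hashlib


def content_hash(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def assets_to_rebuild(assets: dict, manifest: dict) -> list:
    """Return names of assets whose content changed since the last build.

    assets:   name -> current file bytes
    manifest: name -> hash recorded at the previous build (updated in place)
    """
    changed = []
    for name, data in sorted(assets.items()):
        h = content_hash(data)
        if manifest.get(name) != h:
            changed.append(name)
            manifest[name] = h
    # forget assets that were removed from the project
    for name in list(manifest):
        if name not in assets:
            del manifest[name]
    return changed
```

Rebuilding only the changed assets keeps the turnaround short even when a tile set contains thousands of textures.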

Microsoft demonstrated tiled streaming with hardware acceleration through DirectX 11.2 using Granite SDK, and the results were simply unbelievable.

Shifting the topic to the new consoles: the Xbox One has extremely fast eSRAM memory with a peak bandwidth of up to 204 GB/s. There is potential in combining DirectX 11.2 tiled resources with the eSRAM to achieve better results on both PC and Xbox One. The PlayStation 4 supports Partially Resident Textures, its equivalent of the tiled resources used on Xbox One. Both fall under what is collectively called hardware virtual texturing. Aljosha explains how Granite SDK uses these two different APIs to get the most out of the consoles.

“The Granite SDK supports both software and hardware virtual texturing. The latter is also called “Tiled Resources” in DX 11.2 or Partially Resident Textures in OpenGL. The benefit of hardware virtual texturing is that the hardware now takes care of filtering across tile borders as well as fetching the correct pixel from the cache. This makes the shaders less complex and faster to execute. Another big advantage is that you don’t need to add pixel borders to your tiles so that the memory usage can be reduced. The Granite SDK will automatically switch to hardware virtual texturing if it is available on your system, or it will fall back to software virtual texturing if it’s not.
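The memory cost of those pixel borders is easy to estimate. This sketch assumes each 128×128 tile is padded with a hypothetical 4-pixel border on every side (the border width Granite uses is not stated). The padded tile is then 136×136, so about 12.9% of a software cache goes to duplicated border texels that hardware virtual texturing does not need.

```python
def border_overhead(tile_size: int = 128, border: int = 4) -> float:
    """Extra memory fraction when each tile stores `border` extra pixels on every side.

    Software virtual texturing pads tiles so that filtering at a tile edge can
    read neighbouring texels; hardware tiled resources filter across tiles
    themselves, so the padding (and this overhead) can be dropped.
    """
    padded = tile_size + 2 * border
    return padded * padded / (tile_size * tile_size) - 1.0
```

Wider borders, which some implementations use to support anisotropic filtering, push the overhead higher still.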

At this year’s Game Developers Conference, Microsoft announced that the next version of DirectX, DirectX 12, will arrive next year on mobile devices, Xbox One and PC. DirectX 12 will supposedly reduce CPU overhead by 50% and aims to remove bottlenecks, especially in dual-GPU configurations. Aljosha believes this could have a positive impact on the SDK’s performance.

“DX12 continues to build on DX11.1+ and as such, also includes the Tiled Resources feature. DX12 is however closer to the metal and gives more control to the developer. This allows us to further optimize our tiling backend (for DX12) that takes care of loading texture tiles into video memory. Also, we get more access to how data is stored in video memory, which is very important for us. Specifically for our tiling backend, we expect a performance increase compared to DX11.1+ but we don’t have any hard numbers on this yet.

But where does this leave AMD’s Mantle? Mantle is a low-level API that gives PC developers more console-like access to the hardware. Technically speaking, Mantle provides direct access to AMD’s GCN architecture, allowing far more draw calls per frame. And, just like with DX12, the SDK stands to benefit when used with Mantle.

“We are always excited to see new technologies emerge. We love low-level access through an API like Mantle because then we can get the most out of every system. This is essentially the reason why the old generation consoles could render decent graphics, even years after their initial release. Having more low-level access to the GPU does make it more difficult to program for. But, for texture streaming at least, we aim to provide a highly optimized system that is easy and quick to setup for a game application.

Perhaps the biggest benefit of the Granite SDK is that it puts little load on the GPU. This is especially relevant for the PS4 and Xbox One, which have far fewer compute units (18 and 12 respectively) than a high-end PC GPU. So, according to Aljosha, the question of whether those GPUs have enough compute units to make full use of Granite SDK is irrelevant.

“Tiled streaming does not really tax the GPU much, unless you’re decoding on the GPU. Support for this is not active in our current Granite SDK release. We are considering it because game developers love the flexibility of being able to choose between CPU-side decompression and GPU-side decompression. On the CPU side, decoding our tiles on the fly actually doesn’t take up much processing power. We spent a long time optimizing this!
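CPU-side decoding parallelizes naturally because tiles are independent of each other. The sketch below decodes a batch of tiles on a thread pool; zlib is only a stand-in for Granite’s own tile codec, which is not public, and `decode_tiles` is a hypothetical helper. In a native engine the same pattern would run on dedicated worker threads, with the thread count left configurable.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def decode_tiles(compressed_tiles: dict, decode_threads: int = 2) -> dict:
    """Decompress independent tiles in parallel on a pool of worker threads.

    zlib stands in for the real (unpublished) tile codec; the threading pattern is the point.
    """
    with ThreadPoolExecutor(max_workers=decode_threads) as pool:
        futures = {key: pool.submit(zlib.decompress, blob)
                   for key, blob in compressed_tiles.items()}
        # result() blocks until each tile is decoded and re-raises any decode error
        return {key: future.result() for key, future in futures.items()}
```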

But given their slightly different architectures, does texture streaming work differently on the PS4 and Xbox One? Or is it simply a case of plugging in the API and letting it do the talking? Aljosha explains that the SDK has been optimized to take advantage of each console’s capabilities, and that it’s up to developers to set the parameters according to their game’s requirements.

“Granite SDK indeed takes care of all the platform specific stuff and makes sure that you’re up and running quickly on both platforms. Granite exposes a set of configuration parameters like the size of the texture cache in main memory, the number of threads used for decoding, the maximum allowed throughput of texture data per frame, etc. A game developer will want to tweak these parameters depending on the behaviour of his/her own game, and based on the capabilities of the hardware of a specific platform. We provide fast implementations of the Granite runtime for both platforms so that only the values of these configuration parameters might change on the different platforms (from the game developer’s perspective, that is).
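The parameters Aljosha lists can be pictured as a small per-platform configuration plus a scheduler that respects the per-frame throughput limit. The field names, function name and default values here are all hypothetical. The sketch takes pending uploads in priority order until the budget is spent, always allowing at least one so that a single oversized tile cannot stall streaming.

```python
from dataclasses import dataclass


@dataclass
class StreamingConfig:
    """Per-platform tuning knobs (hypothetical names and defaults)."""
    cache_size_mb: int = 256
    decode_threads: int = 2
    max_upload_bytes_per_frame: int = 4 * 1024 * 1024


def schedule_uploads(pending, config):
    """Split (key, nbytes) requests, already in priority order, into this frame's batch and the rest."""
    budget = config.max_upload_bytes_per_frame
    batch, used = [], 0
    for i, (key, nbytes) in enumerate(pending):
        # stop once the budget would be exceeded, but always upload at least one tile
        if batch and used + nbytes > budget:
            return batch, pending[i:]
        batch.append(key)
        used += nbytes
    return batch, []
```

A game would keep one such configuration per platform and tune the values, for example giving more decode threads to a platform with spare CPU cores.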

“We’ve been working on the SDK for 4 years now. Every sub module of the system is constantly being improved upon to be faster and push the limits of what we can do on each platform.

Unreal Engine 4 is one of the most powerful engines available, and Granite SDK has a plugin for it. The SDK allows for textures up to 256,000 x 256,000 pixels, something that is simply not possible in other proprietary engines.

“For UE4, we actually have a plugin that allows you to easily use our SDK. The main benefit is a significant memory reduction and extra on-disk compression. Also, our system easily handles many large 8K textures in one 3D scene. Most 3D engines will find this difficult. And of course, the SDK allows for textures up to 256,000 x 256,000 pixels, something that is just not possible with most engines. As we said before, the Granite SDK can be integrated into any engine and any production pipeline to extend its functionality with fine-grained texture streaming.
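Some quick arithmetic shows why a 256,000 × 256,000 texture is only practical with tile streaming. Assuming 128×128 tiles and uncompressed 4-byte RGBA texels (real data would be block-compressed and further compressed on disk), the finest mip level alone is 4,000,000 tiles and 262 GB (about 244 GiB), far beyond any GPU’s memory. The helper name is hypothetical.

```python
def virtual_texture_stats(size_px: int, tile_size: int = 128, bytes_per_texel: int = 4) -> dict:
    """Mip count, finest-level tile count and uncompressed finest-level size of a square texture."""
    mip_levels = size_px.bit_length()  # floor(log2(size)) + 1 levels, down to 1x1
    tiles_per_side = (size_px + tile_size - 1) // tile_size  # integer ceiling division
    return {
        "mip_levels": mip_levels,
        "tiles_level0": tiles_per_side * tiles_per_side,
        "bytes_level0": size_px * size_px * bytes_per_texel,
    }
```

With tile streaming, only the handful of those tiles that are visible in a given frame ever need to be in video memory.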

As for bringing the SDK to mobile gaming, Aljosha appears to be taking a wait-and-see approach.

“The mobile platforms are getting very powerful. We hope to be able to release Granite SDK for mobile soon. It’s hard to do any predictions in this area but we strongly believe that texture streaming and virtual texturing also have real benefits for mobile games.

At the end of our interview, Aljosha said there is still a lot developers can get out of the SDK, and he remains optimistic since the technology is evolving quickly.

“You can go a long way with the current Granite SDK. Streaming essentially solves memory limitations. However, storage becomes the next bottleneck. We have compression algorithms on disk that reduce the disk size of texture data between 60% and 80%. But artists will still be able to fill up a Blu-ray pretty fast. It is hard to predict how the industry will react to that.
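To put the 60% to 80% figure in perspective: if compression removes a fraction `r` of the texture data, a disc of capacity `C` holds `C / (1 - r)` of raw texture data. Assuming a 50 GB dual-layer Blu-ray devoted entirely to textures (an upper bound, since code, audio and other assets need space too), that is 125 GB of raw texture data at 60% and 250 GB at 80%. The function name is hypothetical.

```python
def raw_texture_capacity_gb(disc_gb: float, reduction: float) -> float:
    """Raw (pre-compression) texture data that fits on a disc when compression
    removes the given fraction of it, e.g. reduction=0.6 for a 60% saving."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return disc_gb / (1.0 - reduction)
```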

Granite SDK is now available for PlayStation 4, Xbox One, PC, Mac and Linux. The SDK also supports the Unity engine. Screenshots can be found below.