Much has been made of what can be achieved using DirectX 12, but there is still a lot we don’t know about the API. Yes, it will reduce CPU overhead, which will be a big win for players running dual-GPU configurations. It is also supposed to reduce GPU overhead and improve performance by 20%, so it goes without saying that the prospects are simply amazing.

During last year’s GDC, Microsoft gave us a decent overview of what the next iteration of the API could do, but they never really went into the finer details. At this year’s GDC, Microsoft’s Max McMullen gave the audience an in-depth look at the new features and performance improvements that the new API brings.

First of all, work on DirectX 12 is largely complete, with working drivers. There may be small tweaks based on the feedback that Microsoft receives from developers, but overall the current build is very close to the final version that they plan to ship later this year. Microsoft has fully functional and performant drivers from all three hardware vendors: Intel, AMD and Nvidia.

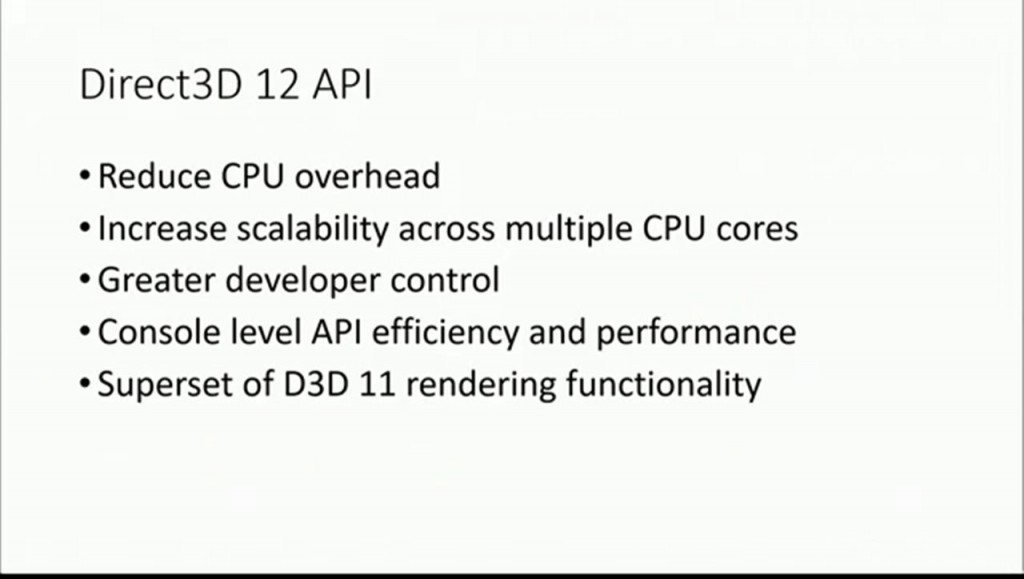

McMullen also revealed that DX12-capable hardware already makes up 50% of the current gaming PC market, and that number is expected to reach 66% when the API launches this year. In terms of early access, over 400 developers from over 100 studios already have access to it. The API aims to give developers console-level efficiency and control on gaming PCs and mobile. The biggest draw is that no rendering functionality has been lost: anything that developers can do on DX11 can also be done on DX12.

Rendering features and overview:

A general overview of DX 12 capabilities.

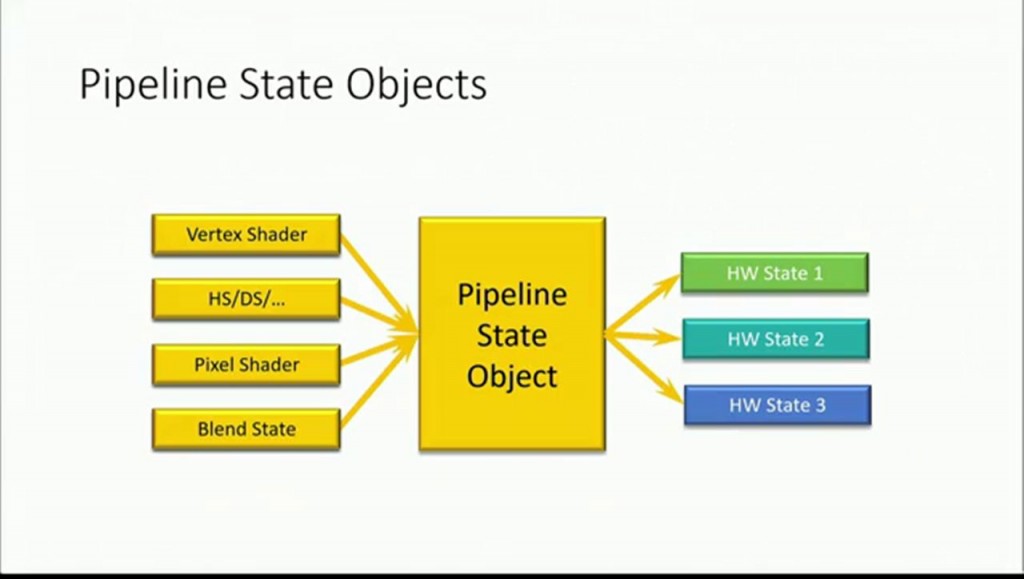

In DX12 all objects are in a single pipeline state object.

In DX11, pipeline state was split across several separate objects, so drivers ended up compiling state at run time during draw calls, which added CPU overhead and reduced performance. While designing DX12, Microsoft opted to combine all of these objects into a single, coherent Pipeline State Object (PSO), so that developers can create one compiled pipeline state and have all of the hardware state compiled in one go.
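To make the idea concrete, here is a minimal Python sketch. It is a toy model rather than the Direct3D 12 API: `PipelineDesc`, `PipelineState`, `draw` and the `compile_count` counter are all made-up names, and the "compilation" is just a hash. The point it tries to show is that the expensive work happens once, when the state object is created, and not on every draw call.

```python
from dataclasses import dataclass

compile_count = 0  # how many times our pretend driver compiled pipeline state


@dataclass(frozen=True)
class PipelineDesc:
    """Hypothetical description bundling everything the pipeline needs."""
    vertex_shader: str
    pixel_shader: str
    blend: str
    depth_test: bool


class PipelineState:
    """All state is 'compiled' once, at creation time."""

    def __init__(self, desc):
        global compile_count
        compile_count += 1                # the expensive step happens here only
        self.hardware_state = hash(desc)  # stand-in for compiled hardware state


def draw(pso, mesh):
    # A draw only references the precompiled state; nothing is compiled here.
    return (pso.hardware_state, mesh)


pso = PipelineState(PipelineDesc("vs_main", "ps_main", "opaque", True))
draws = [draw(pso, mesh) for mesh in ("rock", "tree", "house")]
```

Three draws reuse the same object, so `compile_count` stays at 1 no matter how many meshes are drawn.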

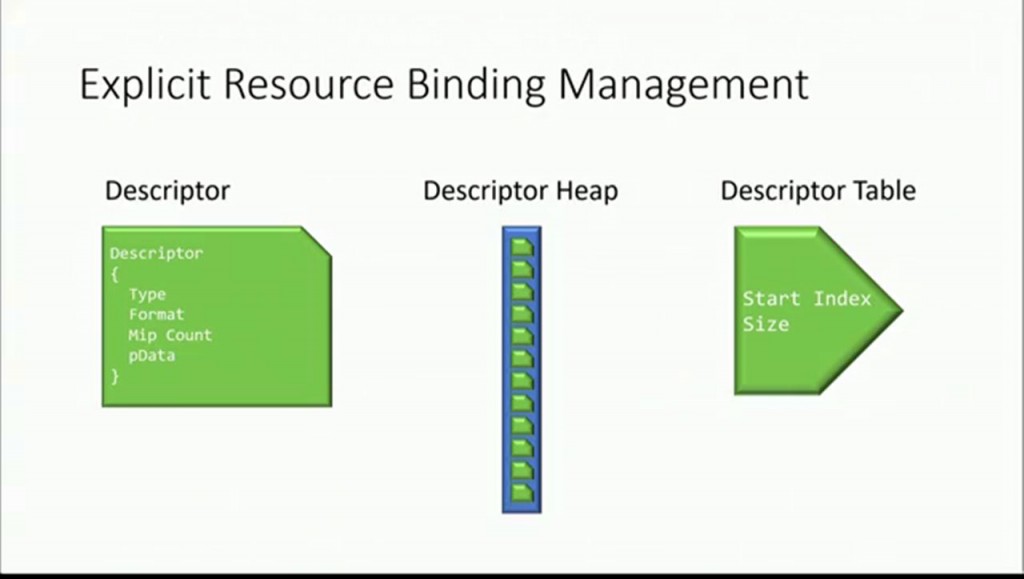

Resource binding management and descriptors.

DX11 was a pretty busy API in which developers created lots of shader resource views and render target views, resulting in a large overhead that grew with the number of resources in use. With DX12, Microsoft opted for an explicit memory model in which binding objects are instead stored as structures in GPU memory. Developers can create a descriptor for a GPU resource and copy it from one location to another, and all of these descriptors are packed together as an array in a descriptor heap.
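As a rough illustration of the "array of descriptors" idea, the following Python sketch models a descriptor heap as a fixed-size list. The `Descriptor` and `DescriptorHeap` classes and their methods are assumptions made for this example and do not correspond to real Direct3D 12 types; the resource names are invented too.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Descriptor:
    """Small plain structure describing one resource binding (hypothetical)."""
    resource: str   # which texture or buffer this describes
    view_type: str  # e.g. "SRV" for a shader resource view


class DescriptorHeap:
    """A fixed-size array of descriptor slots."""

    def __init__(self, capacity):
        self.slots = [None] * capacity

    def write(self, index, descriptor):
        self.slots[index] = descriptor

    def copy(self, src, dst):
        # Copying a descriptor is just copying a small structure between slots.
        self.slots[dst] = self.slots[src]


heap = DescriptorHeap(capacity=4)
heap.write(0, Descriptor("albedo_texture", "SRV"))
heap.write(1, Descriptor("shadow_map", "SRV"))
heap.copy(src=1, dst=3)
```

Because a descriptor here is only a small value in an array slot, moving bindings around is cheap compared with creating and tracking a separate view object for every resource.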

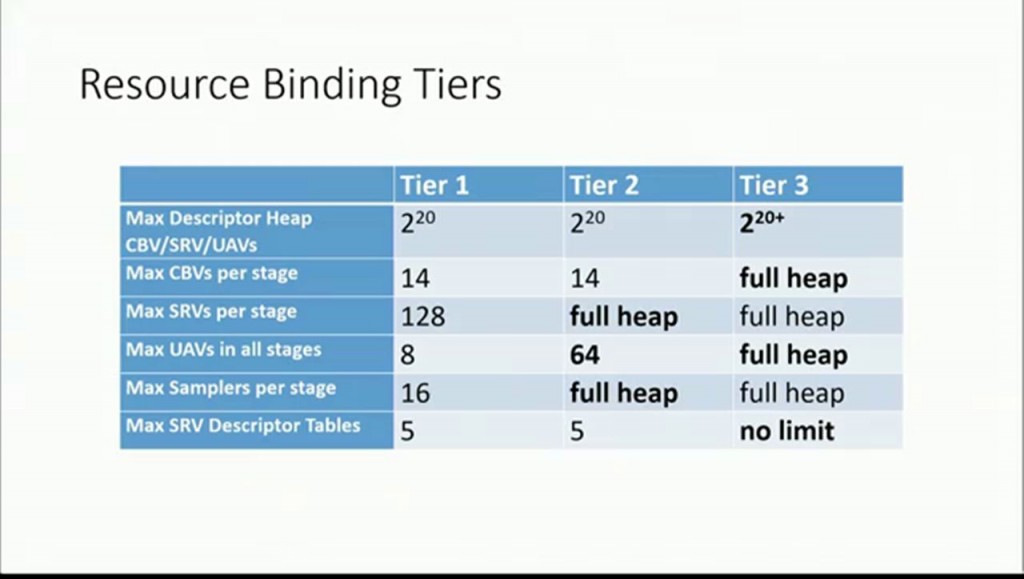

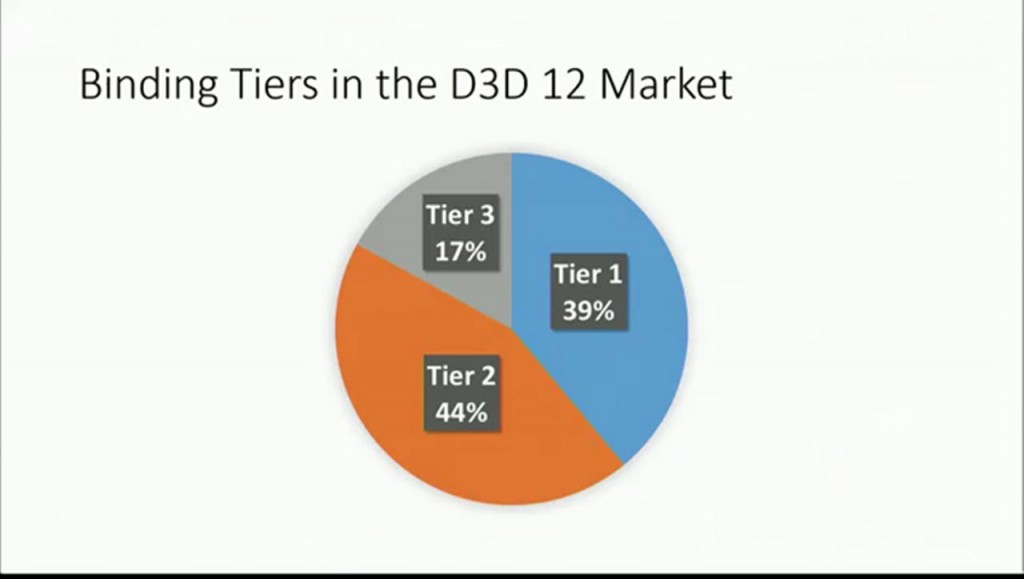

Resource binding tiers according to maximum supported size of descriptor heaps.

Market share of each tier.

Hazards such as maintaining coherency on the GPU are resolved using the ResourceBarrier API.

In order to maximize performance across GPUs, Microsoft has defined Resource Binding Tiers, which are differentiated by the maximum supported size of descriptor heaps. The first tier is for early DX11 hardware and the third tier is for modern GPUs that allow larger descriptor heaps. Because resource binding is explicit, resource hazards also have to be dealt with explicitly, such as maintaining coherency on the GPU or switching a resource between being a render target and being a texture. The ResourceBarrier API takes care of any issues that may arise from such hazards.
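The render-target-to-texture hazard can be sketched in a few lines of Python. This is a simplified model, not the real API: the `Resource` class, the helper functions and the state names `"RENDER_TARGET"` and `"SHADER_RESOURCE"` are illustrative assumptions. It only shows the idea that a resource must be explicitly transitioned before it is used in a different way.

```python
class Resource:
    def __init__(self, name, state):
        self.name = name
        self.state = state  # how the GPU is currently allowed to use it


def resource_barrier(resource, before, after):
    """Explicitly transition a resource from one usage state to another."""
    if resource.state != before:
        raise RuntimeError(f"{resource.name} is in state {resource.state}, not {before}")
    resource.state = after


def render_to(resource):
    if resource.state != "RENDER_TARGET":
        raise RuntimeError(f"hazard: {resource.name} is not a render target")


def sample_from(resource):
    if resource.state != "SHADER_RESOURCE":
        raise RuntimeError(f"hazard: {resource.name} is not readable as a texture")


shadow_map = Resource("shadow_map", "RENDER_TARGET")
render_to(shadow_map)  # first pass writes the shadow map
resource_barrier(shadow_map, before="RENDER_TARGET", after="SHADER_RESOURCE")
sample_from(shadow_map)  # later pass reads it as a texture
```

Without the barrier call, `sample_from` would raise in this model, which mirrors the kind of read-after-write hazard the barrier is meant to prevent.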

One of the most exciting features of DX12, flexible pipeline parameterization.

Moving on, Microsoft spent a lot of time working on flexible pipeline parameterization. Microsoft describes this feature as one of the gems that DX12 has to offer. With it, developers can fully exploit CPU and GPU performance and change descriptors on the fly while drawing. This is achieved using fast paths, such as registers, to pass parameters to the pipeline. It is similar to a function signature for the shader pipeline: a list of arguments, such as integers or floats, that get passed to the shaders.

Explicit GPU and CPU synchronization results in freed memory.

In previous APIs, if the developer deleted a resource, the API would not free the video memory until the GPU had executed the rendering commands that used it, and tracking this resulted in more overhead. But since game developers know what is going to be deleted and when, they only need to synchronize with the GPU and delete resources as needed. A good example that McMullen gave during the talk was the constant streaming of geometry between CPU and GPU. One can allocate a large chunk of memory that is accessible to both CPU and GPU, write geometry into the buffer, render it, and track when those rendering calls have completed on the GPU. Once they are complete, the memory can be reused for any other purpose.
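The streaming example can be approximated with a small Python model. Everything here is an assumption made for illustration: `StreamingBuffer`, the integer "fence values" and the byte-count bookkeeping are simplifications, and no real GPU or Direct3D 12 call is involved. Each allocation is tagged with the fence value of the frame that uses it, and memory is only reclaimed once the (pretend) GPU reports that fence as completed.

```python
from collections import deque


class StreamingBuffer:
    """Tracks how much of a shared CPU/GPU buffer is still in use by the GPU."""

    def __init__(self, size):
        self.size = size
        self.used = 0
        self.in_flight = deque()  # (fence_value, byte_count), oldest first

    def allocate(self, byte_count, fence_value):
        """Reserve space for data the GPU will read under fence_value."""
        if self.used + byte_count > self.size:
            return False  # the GPU may still be reading older data
        self.used += byte_count
        self.in_flight.append((fence_value, byte_count))
        return True

    def reclaim(self, completed_fence):
        """Free every allocation whose rendering work has finished."""
        while self.in_flight and self.in_flight[0][0] <= completed_fence:
            _, byte_count = self.in_flight.popleft()
            self.used -= byte_count


buf = StreamingBuffer(size=100)
first = buf.allocate(60, fence_value=1)   # frame 1 geometry fits
second = buf.allocate(60, fence_value=2)  # no room while frame 1 is in flight
buf.reclaim(completed_fence=1)            # GPU signals that frame 1 is done
third = buf.allocate(60, fence_value=2)   # the same memory is reused
```

The application, not the API, decides when memory is safe to reuse, which is the trade-off described above: less hidden overhead in exchange for explicit bookkeeping.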

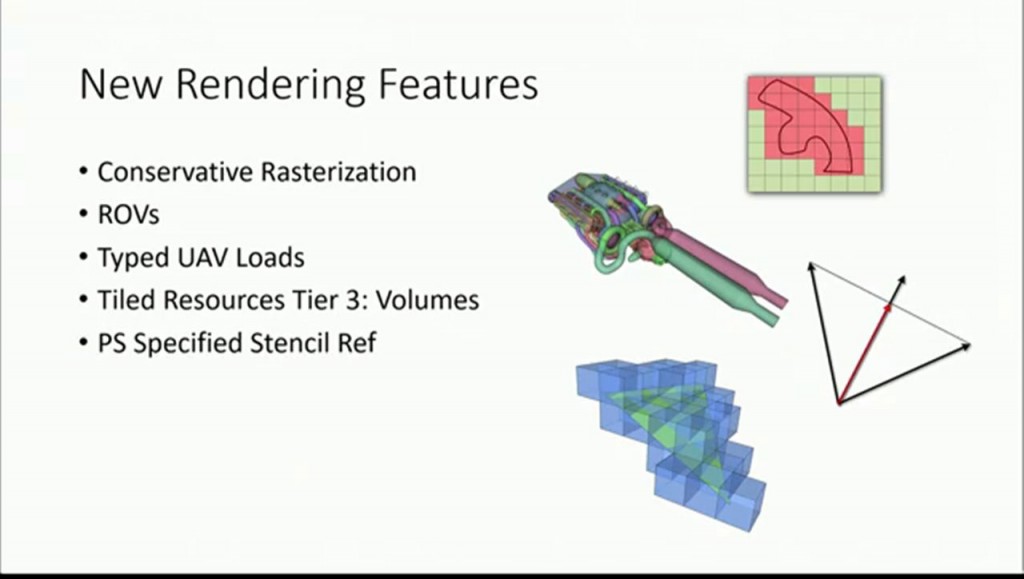

DirectX 12 introduces new rendering features.

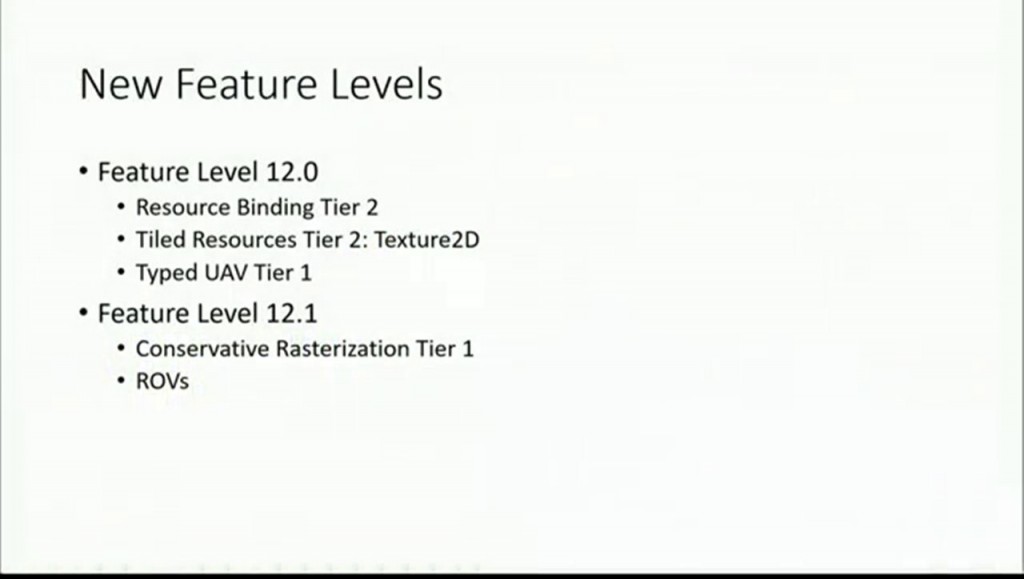

Among the new features that DX12 introduces is Conservative Rasterization, which can be used for occlusion detection, curve rendering and transparency. In short, developers won’t need to create workarounds to achieve these. Second is Tiled Resources Tier 3, with which volumes such as sparse volumes can now be built easily. Developers can also specify the stencil value inside shaders. The new feature levels are extremely powerful, and come alongside CPU advantages such as pre-baking the binding and tracking materials.

Unity performance improvements:

Kasper Engelstoft, Graphics Engineer at Unity, revealed that they first got their hands on the DirectX 12 SDK back in September 2014, and within two weeks they were able to render with the new API. By mid-January, they had achieved a 95% success rate in their internal tests. Engelstoft then showed a demonstration using shadow maps in the Unity engine.

One of the first things Unity looked into was improving the performance of shadow map rendering. With DX12, developers can get performance gains from the API’s support for multi-threaded shadow map rendering. Engelstoft explained that the main goal is to move tasks such as shadow maps off the main thread, freeing it for other work. Unity has the concept of a client and a master graphics device, so it generates a command list that is then called upon by the API on the client device. But with DX12 it is possible to generate multiple command lists on multiple threads, which means that developers can skip the intermediate lists, resulting in performance improvements.

The main thread then builds the main scene command list while the other threads work on the shadow map command lists. This means that shadow map rendering is no longer dependent on the main thread that is working on the main scene. Each shadow draw call is also cheap, as it only deals with shadows, leaving the main thread free for other important things such as game logic or physics.
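The threading pattern described here can be sketched in Python. This is only a structural illustration under several assumptions: the function names, the string "commands" and the three-light scene are invented, and Python threads will not show the real speed-up because of the interpreter lock. Worker threads record one shadow command list per light while the main thread records the main scene, and everything is submitted together at the end.

```python
from concurrent.futures import ThreadPoolExecutor


def record_shadow_map(light_index, objects):
    """Record the command list for one light's shadow map (runs on a worker)."""
    return [f"light{light_index}:draw_shadow:{obj}" for obj in objects]


def record_main_scene(objects):
    """Record the main scene command list (runs on the main thread)."""
    return [f"main:draw:{obj}" for obj in objects]


objects = ["rock", "tree", "house"]
light_count = 3

with ThreadPoolExecutor(max_workers=light_count) as pool:
    # Kick off shadow map recording on the worker threads.
    shadow_futures = [
        pool.submit(record_shadow_map, i, objects) for i in range(light_count)
    ]
    # Meanwhile, the main thread records the main scene.
    main_list = record_main_scene(objects)
    shadow_lists = [future.result() for future in shadow_futures]

# Submit shadow passes first, since the main scene samples the shadow maps.
submission = [cmd for cmd_list in shadow_lists for cmd in cmd_list] + main_list
```

Recording is independent per list, so the only ordering decision left is at submission time.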

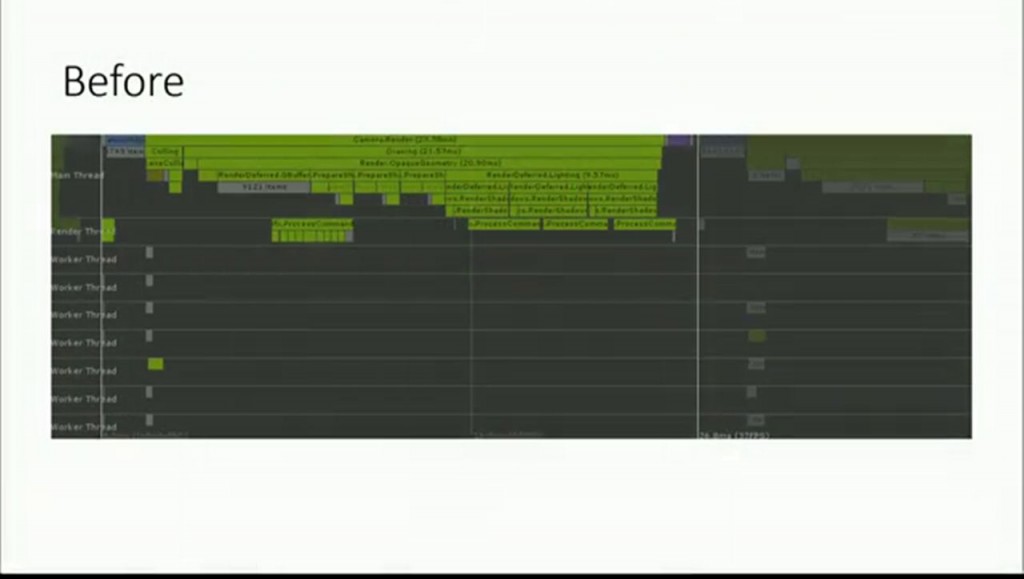

Frame time is 23 ms when Unity used their standard procedure to render shadow maps.

As shown in the image above, the shadow map rendering was placed on the main thread, and the main scene itself consists of 7000 lit objects. Engelstoft revealed that it takes around 23 ms to render this scene.

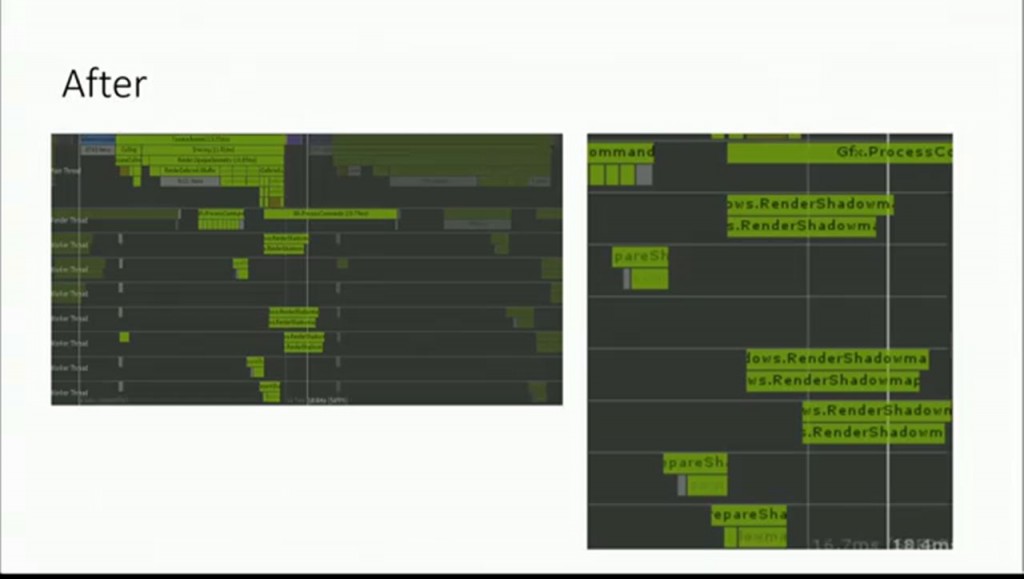

Frame time reduced to 13 ms using DX12 multi-threading capabilities.

With DX12, the shadow maps were moved to other threads, which resulted in a frame time of 13 ms, saving around 10 ms per frame. Please note that this did not happen by magic: the developer had to think outside the box to get this working. This is in line with what Stardock CEO Brad Wardell has said about the new API. So yes, developers will need to optimize their code and threading strategies.

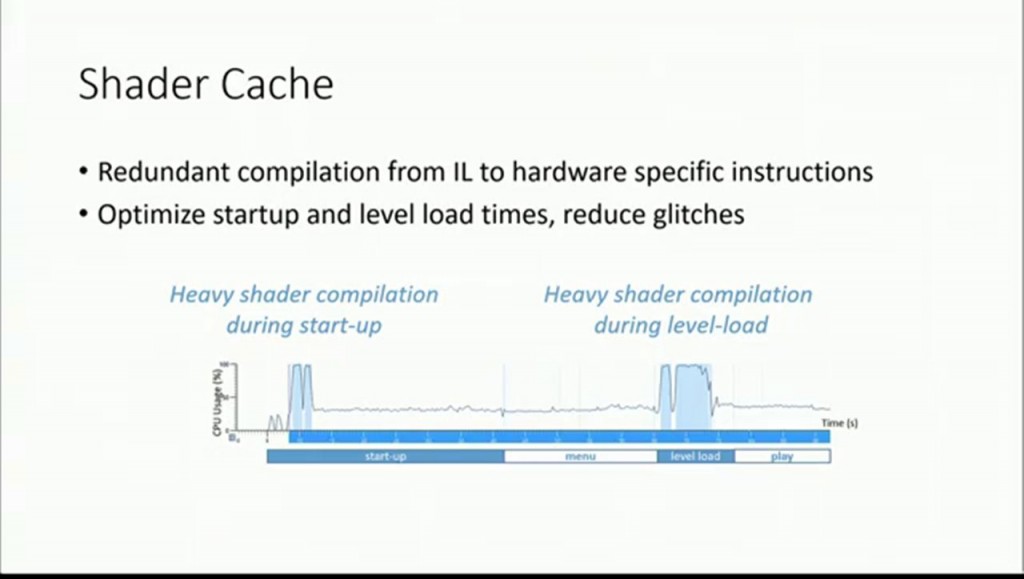

Shader Cache and ExecuteIndirect:

DirectX 12 comes with a developer-controlled shader cache. A typical frame has around 200 to 400 pipeline state objects (PSOs), and in some cases even 1000. The cache in DX12 is entirely under the developer’s control. When creating a PSO, developers can specify a flag to cache the output. The API will then return a fully compiled binary representation of all the hardware instructions, so all of the heavyweight, expensive computation has already been done by that point.
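A developer-controlled cache of compiled pipeline state can be modelled in a few lines of Python. This sketch is an assumption-laden stand-in: `compile_pipeline` just hashes a description string in place of real compilation, and `ShaderCache` is a hypothetical class, not part of DirectX. It shows the application keeping the compiled binary and skipping recompilation on later requests.

```python
import hashlib

compile_calls = 0  # counts how often the expensive step actually runs


def compile_pipeline(description):
    """Stand-in for the expensive compile; returns a 'binary' blob."""
    global compile_calls
    compile_calls += 1
    return hashlib.sha256(description.encode()).digest()


class ShaderCache:
    """Application-owned cache of compiled pipeline state blobs."""

    def __init__(self):
        self.blobs = {}

    def get_or_compile(self, description):
        if description not in self.blobs:
            self.blobs[description] = compile_pipeline(description)
        return self.blobs[description]


cache = ShaderCache()
a = cache.get_or_compile("vs_main+ps_main+opaque")
b = cache.get_or_compile("vs_main+ps_main+opaque")    # served from the cache
c = cache.get_or_compile("vs_main+ps_glow+additive")  # new state, compiled once
```

In a real game the blobs would presumably be written to disk so that later runs can skip compilation as well; that part is left out here.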

Something new to the API is ExecuteIndirect which replaces DrawIndirect and DispatchIndirect and can perform multiple draw calls with a single API call. The number of calls can be controlled by the GPU or CPU and the developers can even change the bindings between draw calls.
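The following Python sketch models the idea behind ExecuteIndirect. It is a toy, not the real call: the function signature, the dictionary-based argument buffer and the one-element `count_buffer` list are assumptions chosen for readability. One call walks a buffer of draw arguments, the number of draws can come from a buffer that the GPU could have written, and each entry can carry its own binding.

```python
def execute_indirect(argument_buffer, max_count, count_buffer=None):
    """Issue up to max_count draws from a buffer of draw arguments.

    If count_buffer is given, the actual count is read from it, so the
    number of draws can be decided on the GPU and not on the CPU.
    """
    count = max_count
    if count_buffer is not None:
        count = min(max_count, count_buffer[0])

    executed = []
    for args in argument_buffer[:count]:
        # Each entry may change a binding (here, a texture) before its draw.
        executed.append((args["texture"], args["index_count"]))
    return executed


argument_buffer = [
    {"texture": "rock_texture", "index_count": 36},
    {"texture": "ice_texture", "index_count": 72},
    {"texture": "metal_texture", "index_count": 144},
]
count_buffer = [2]  # pretend a culling pass on the GPU kept only two asteroids

culled = execute_indirect(argument_buffer, max_count=3, count_buffer=count_buffer)
everything = execute_indirect(argument_buffer, max_count=3)
```

With the count buffer in play, the CPU issues the same single call whether two asteroids or all three survive culling.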

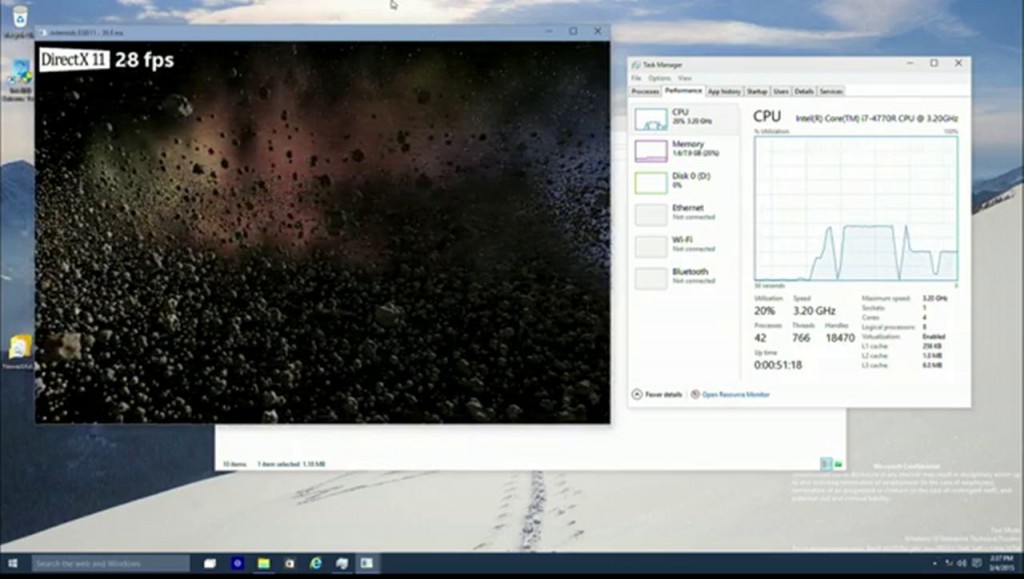

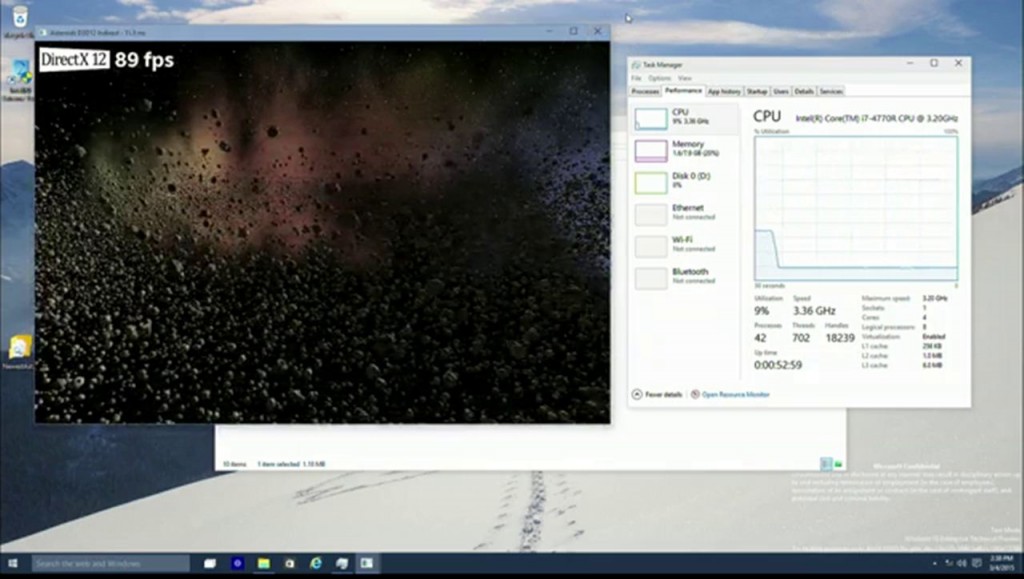

To show the advantages of ExecuteIndirect, Microsoft showcased an updated build of Intel’s Asteroids demo. Using DX11, the frame rate was around 28 fps with average CPU usage at 20%. Please note that the demo is running in free mode, meaning that DX11 is free to fully utilize the CPU and GPU.

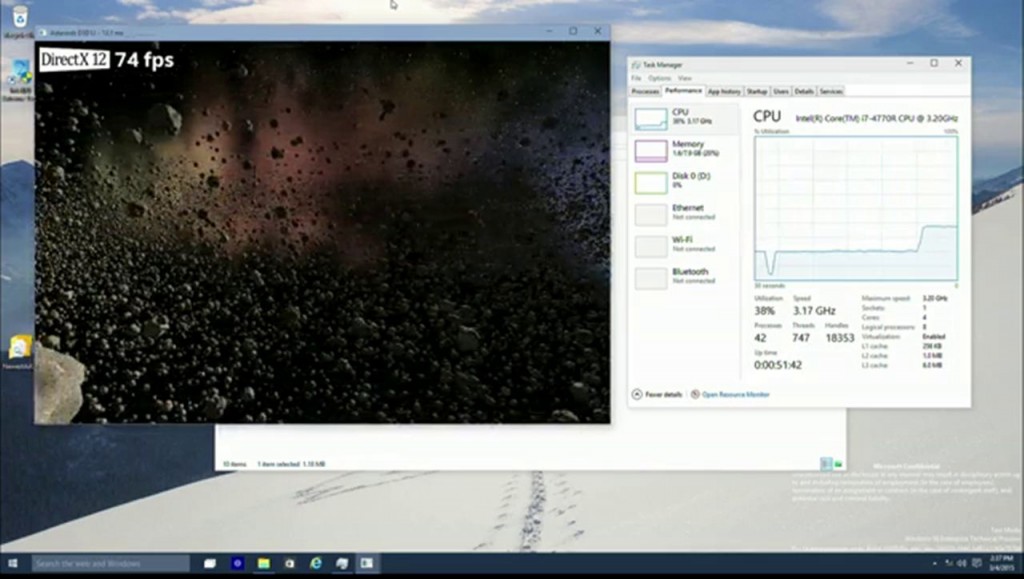

Switching to DX12, the frame rate rises to 73 fps, roughly 2.6 times the DX11 figure.

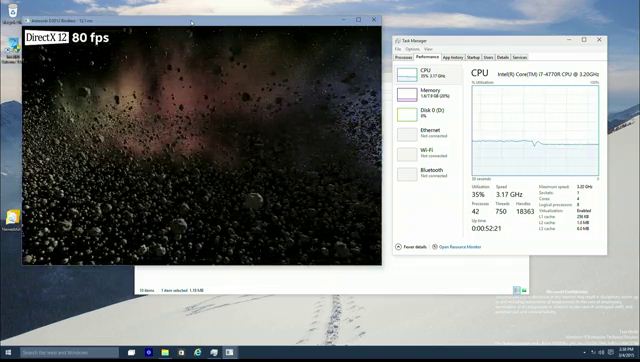

If the textures are prebaked on the asteroids, the results show an improvement in frame rate to 80 fps, along with some reduction in CPU usage.

Using ExecuteIndirect results in another 10% GPU performance improvement, and CPU usage is brought down by several more percentage points.

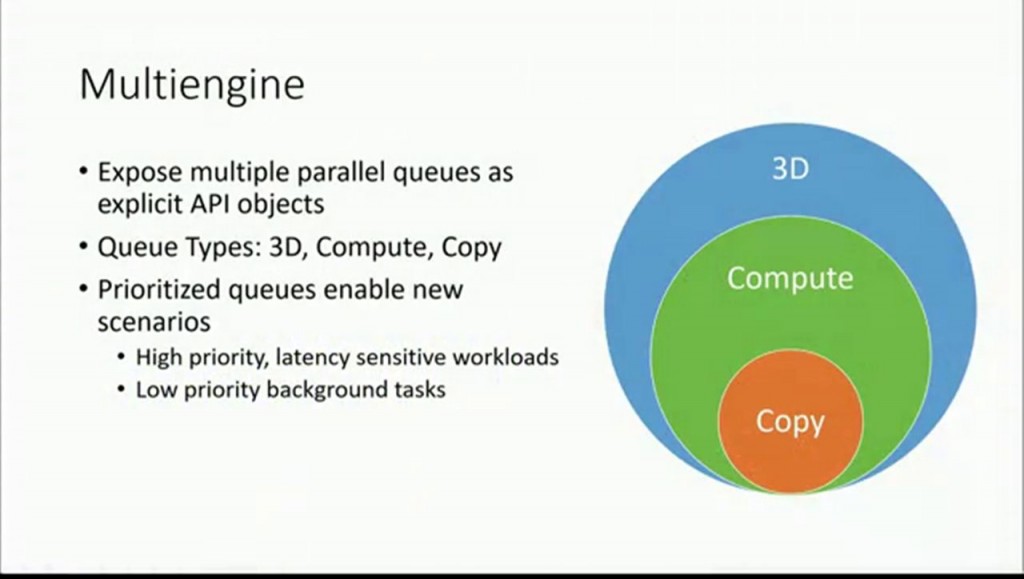

Multiengine:

DX12 does not have a monolithic queue like DX11 did. Instead it has three queue types: 3D, Compute and Copy. Copy is important in cases where the developer wants to do background texture streaming, Compute handles dispatch work, and 3D covers everything that DX11 can do in batches. Using the queue structure, developers can prioritize between workloads or background tasks. Right now the priority system is simply based on low, medium and high, but in the future the API may support real-time and spatial priorities.
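As a loose illustration of typed queues with priorities, here is a small Python model. It is heavily simplified and entirely hypothetical: the `Scheduler` class and its methods are invented, and a real GPU can run the different queues concurrently instead of draining them one item at a time. It only shows work being tagged with a queue type and a low, medium or high priority, with higher-priority work picked first.

```python
import heapq

QUEUE_TYPES = ("3D", "Compute", "Copy")
PRIORITY = {"high": 0, "medium": 1, "low": 2}  # smaller number runs first


class Scheduler:
    def __init__(self):
        self._heap = []
        self._order = 0  # tie-breaker so equal priorities stay first-in, first-out

    def submit(self, queue_type, priority, work):
        if queue_type not in QUEUE_TYPES:
            raise ValueError(f"unknown queue type: {queue_type}")
        heapq.heappush(self._heap, (PRIORITY[priority], self._order, queue_type, work))
        self._order += 1

    def drain(self):
        """Return all submitted work, highest priority first."""
        ordered = []
        while self._heap:
            _, _, queue_type, work = heapq.heappop(self._heap)
            ordered.append((queue_type, work))
        return ordered


scheduler = Scheduler()
scheduler.submit("Copy", "low", "stream textures in the background")
scheduler.submit("3D", "high", "draw the scene")
scheduler.submit("Compute", "medium", "simulate particles")
order = scheduler.drain()
```

Background texture streaming on the Copy queue ends up last here, which matches the use case mentioned above: it should not get in the way of rendering.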

Fable Legends Performance Comparisons:

As you can see from the image above, the demonstration on the top is running on DirectX 12 at 1080p, and at a higher frame rate than the DX11 build below, which is running at a lower resolution.

In the second demonstration, the DX11 build of Fable Legends runs at 40-odd fps, but the DX12 build boosts it to 60 frames per second. [Check the frame rate below the average frame rate].

Closing Words:

It will be pretty exciting to see the potential of DirectX 12 unfold later this year and beyond. Modern GPUs and CPUs should see massive improvements from DX12, and the impact of ExecuteIndirect sounds extremely intriguing. As far as Xbox One goes, the console already has a low-level API similar to DX12, so the impact might not be as massive as it will be on PC platforms.

One cannot deny that the console is forever going to be limited by its static hardware specifications, but such is the nature of these mid-priced machines. We have previously reported that DX12 might help with Xbox One’s eSRAM bottleneck by making command streaming between CPU and GPU easier and faster, and there is the potential for tiled streaming using the eSRAM’s high bandwidth. But this is speculation on our part and, as it stands, the impact of DX12 on Xbox One is still a bit of a mystery. Hopefully Microsoft will release more details specific to the Xbox One soon. For PC players, this is an exciting time to be a part of the PC master race.