Today’s industry, which demands cutting-edge visuals and no compromise with the latest hardware, is built on the effort of individuals like John Hable. Originally a video games programmer, Hable eventually moved into motion capture and facial animation, working on notable releases like Uncharted 2: Among Thieves and briefly on Uncharted 3 and The Last of Us. Nowadays, Hable is looking into further reducing the Uncanny Valley phenomenon that defines today’s visuals and facial animation, bringing gamers one step closer to a world completely unlike their own.

GamingBolt had a chance to speak to Hable about a number of different topics, including his take on the visuals of Uncharted 4: A Thief’s End – Naughty Dog’s PS4 entry for the franchise – and how important realistic facial animation will become for current gen consoles.

Rashid K. Sayed: Could you tell us a bit about yourself and what you do?

John Hable: The short answer is that I’ve started a company called Filmic Worlds, LLC where I’m scanning actors/models for use in video games. Going from raw scans to a usable face rig is a very complicated and labour-intensive task. So I’ve created a custom pipeline where you can bring in your talent and my service delivers a cleaned up facial rig.

Going back a step, I’ve been working in video games as a graphics programmer since 2005. My first job was with EA working on the UCap project for Tiger Woods 2007 followed by the Spielberg Project (LMNO) that was eventually cancelled. After that I worked for Naughty Dog on Uncharted 2 and some early work on Uncharted 3 and The Last of Us. Since then I’ve been in the games industry doing contract work.

But my real focus these days is facial animation. Two years ago I started doing some independent R&D on facial animation and presented a demo at GDC. At the time I was somewhat oblivious about how art assets were generated. For all I knew artists just did some work and then art assets magically appeared in Perforce.

What I learned is that going from raw scans to a usable blendshape rig requires a tremendous amount of manual labour. Since the data is usually sculpted by hand it also loses many of the nuances in the original scans. That’s why I created a customized pipeline to create a face rig from raw scans. The data that I deliver is not touched by an artist. In theory, a facial rig made this way is cheaper because you do not need an army of artists and also higher quality because it preserves the details of the original scan. In practice things get complicated but that is the theory.

Rashid K. Sayed: You have previously worked at reputable studios such as Naughty Dog. What was the biggest takeaway from working in a studio that demands nothing but excellence?

John Hable: Working at Naughty Dog was a great experience. The main takeaway for me is that there are no shortcuts. Making great games requires very talented people and lots of hard work.

Rashid K. Sayed: There is a lot of talk regarding the term Uncanny Valley in games development. For our readers who are unaware of this term, what exactly is the Uncanny Valley in facial animation and scanning?

John Hable: The basic premise of the Uncanny Valley is that cartoony and/or stylized avatars are relatable. But as those characters start to look too realistic they become creepy. Something about them looks wrong which gets in the way of the viewer developing an emotional attachment to the CG character.

Rashid K. Sayed: We’ve read your article discussing the Uncanny Valley and how surpassing it requires FFA rejection. How much closer is the industry to faking the triggers needed for this?

John Hable: To give your readers the short version, my theory is that the Uncanny Valley is triggered by the Fusiform Face Area (FFA) rejecting the face. Basically, the area of your brain that processes faces is completely different from the area of your brain that processes everything else. This effect has been verified by studying people with brain damage. Some people have had brain damage to their FFA and they cannot recognize faces. This condition is called Prosopagnosia. They can recognize cars, handwriting, clothes, etc. but they cannot distinguish their family members from a random person off the street.

Other people have the reverse problem called Visual Agnosia. They have had brain damage to part of their brain but their FFA is still intact. These people can only recognize faces. They can see colours and basic shapes but they cannot recognize objects, read handwriting, etc. So my theory is that the Uncanny Valley is due to the FFA rejecting the image. If we can figure out what those triggers are and put them in our faces then we should be able to cross the Uncanny Valley even if they are not photoreal.

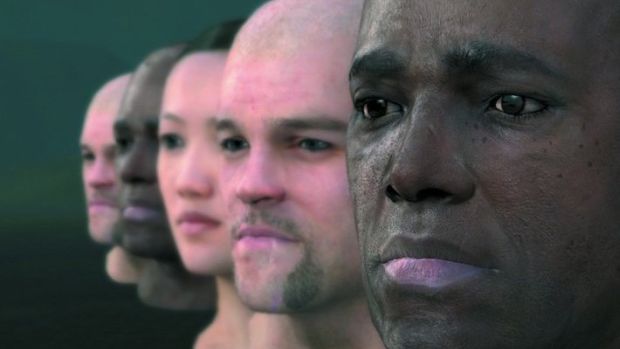

We can solve this problem by capturing an animated colour map of the human face. The most well known game that used this technique is LA Noire, although we did a similar thing for Tiger Woods 2007. If you capture those colour changes and then play them back in real-time then for some reason the face crosses the uncanny valley. The face is not photoreal. You would never mistake it for a photograph of a person. But that animated colour information is enough to make our FFA accept the face and thus cross the uncanny valley.

Unfortunately, capturing the colour changes during a mocap shoot is prohibitive for many, many reasons. Even though the quality is great, the technology has never really taken off because it does not fit with the production logistics of most games.

The solution that I’ve settled on is to capture some set of poses with colour changes, and then blend between those colour maps. I.e. you would capture a colour map for your model in a neutral pose, a colour map for jaw open, a colour map for eyebrows up, etc. Then you can animate your face using standard motion capture solutions and blend between those colour maps automatically. For example, as the character’s rig opens his or her mouth, we can blend in the colour changes from skin stretching in addition to moving the geometry.
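
As a rough illustration of the blending idea described above, the sketch below mixes per-pose colour maps using rig weights. It is not the Filmic Worlds pipeline: the delta-from-neutral formula, the function name `blend_colour_maps`, the pose names, and the tiny two-texel "textures" are all assumptions made for the example.

```python
# Hypothetical sketch: blend per-pose colour maps using rig weights.
# Each "map" is a short list of RGB tuples standing in for a texture.

def blend_colour_maps(neutral, pose_maps, weights):
    """Return neutral + sum(w * (pose - neutral)) per texel, clamped to [0, 1]."""
    blended = []
    for i, base in enumerate(neutral):
        texel = list(base)
        for name, w in weights.items():
            pose_texel = pose_maps[name][i]
            for c in range(3):
                # Add the weighted colour change this pose contributes.
                texel[c] += w * (pose_texel[c] - base[c])
        blended.append(tuple(min(1.0, max(0.0, v)) for v in texel))
    return blended

# Made-up sample data: a neutral map plus two captured poses.
neutral = [(0.80, 0.60, 0.50), (0.78, 0.58, 0.49)]
pose_maps = {
    "jaw_open": [(0.70, 0.55, 0.48), (0.76, 0.57, 0.49)],
    "brows_up": [(0.85, 0.60, 0.50), (0.90, 0.62, 0.52)],
}

# The rig reports the jaw as half open and the brows at rest.
weights = {"jaw_open": 0.5, "brows_up": 0.0}
result = blend_colour_maps(neutral, pose_maps, weights)
```

In a real engine this blend would run per pixel in a shader, with the weights driven by the same rig values that move the geometry.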

Of course, I’m not the first person to experiment with this concept. The inspiration for this approach was the ICT paper on Polynomial Displacement Maps. ICT later created the data for Digital Ira, which was then used in the Activision and NVIDIA demos. Those were great research projects but they do not necessarily scale to the giant volume of data that video games need. That’s why I founded Filmic Worlds, LLC.

In the Filmic Worlds capture pipeline the talent gets solved into a set of blendshapes including both the geometry and the stabilized colour map. In other words, it’s a production-ready solution to blend the colour maps along with the geometry. If we can do that correctly, we should be able to fake those triggers to fool our FFA and get past the uncanny valley.

Rashid K. Sayed: Going off of the demo reels showcased here, the various components listed would indicate that at least a PC game could handle this kind of face scanning in real time, barring the use of 8x MSAA. What other obstacles exist in providing this kind of realistic facial animation in video games?

John Hable: That’s a good question and one that I get quite a bit. The fundamental facial animation algorithm used here is absolutely viable on a PS4 or Xbox One. You could even do it on a PS3 or Xbox 360 if you were motivated enough.

The geometry is nothing more than blendshapes and joint skinning. Some games have already shipped with vastly more complicated rigs. The animated normal and diffuse maps are not difficult either. All you are doing is blending textures together in a pixel shader. The memory is relatively light as well. The high quality rig is 55MB of textures but you only need that much resolution if you get really, really close. You can easily get away with one less mipmap level which would reduce the texture memory cost to about 14MB. That cost is perfectly reasonable on PS4/Xbox One.
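
The memory figure above follows from how mipmaps work: dropping the top mip level halves the width and height, so the cost falls to roughly a quarter. A minimal sketch of that arithmetic, using a hypothetical helper name:

```python
def texture_cost_after_dropping_mips(full_cost_mb, levels_dropped):
    """Each dropped top mip level halves width and height, so cost falls by about 4x."""
    return full_cost_mb / (4 ** levels_dropped)

full_rig_mb = 55.0  # figure quoted in the interview
reduced_mb = texture_cost_after_dropping_mips(full_rig_mb, 1)
print(f"{reduced_mb:.2f} MB")  # prints "13.75 MB", i.e. about 14MB
```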

There are a few graphics techniques used which might be unreasonable on consoles. For example, 8x MSAA is not likely to happen. There are several tricks with spherical harmonics to prevent light leaking which would be difficult to use in a pure deferred renderer. But the animated geometry and texture maps are definitely viable on consoles.

Realistically, there is no major problem with having three or four heads animating in real-time in a cut scene. You would not want to animate a large crowd of heads of this quality but that is what LODs are for.

Rashid K. Sayed: How important will realistic facial scanning become with the current generation of consoles?

John Hable: Facial scanning is becoming increasingly important for realistic games. I think mocap is a good analogy.

About a decade ago the big question in CG animation was motion capture (mocap) versus hand-animation (keyframing) for full body animation. There was one camp that advocated capturing real actor performances and then tweaking the results. And the other camp believed that you could get better quality by hand-animating every frame from scratch.

If you have a short 5 second sequence then you can get equally good animation quality either way. But if you have to animate hours and hours of realistic data on a tight budget then mocap is your only option. The philosophical debate is irrelevant. The war is over and mocap won. Of course animators are essential for processing, cleaning up, and artistically changing the data after it has been captured. Artists also sometimes have to create a few shots from scratch when circumstances conspire against you. But mocap has emerged as a key component of cost-effectively handling large quantities of data.

I see the same pattern repeating for scanning. Can you theoretically get better quality from hand sculpting a head than by performing face scans? Sure. If you have a large enough budget then you can do anything. But if you care about the quality to cost ratio then capturing a real person is much more efficient than sculpting a head from scratch. That is the philosophy behind the Filmic Worlds head scanning service.

The other issue, of course, is the quantity of content. Oftentimes, someone will tell me: “That movie looked great! We should do that!!!” And my response is: “That movie had 1 head, less than 5 minutes of animation, and a small army of animators to do all the work. We have to do 20 heads with 10 hours of animation on half the budget.” It’s always inspiring to see the great work going into VFX, but video game graphics is a fundamentally different problem. We have to be much more focused on quantity.

Rashid K. Sayed: Do you believe current gen consoles are closer to mimicking the high quality CGI seen in movies but in real-time? How much longer will it be before we see games with the same graphical quality as the many CG trailers used to hype them?

John Hable: Honestly, it is hard to say. I think it will be a very long time. The current crop of consoles is much better than the previous generation, but the trailers are getting better too. If you really want to hit that level of quality then you need staggering triangle counts with really long shaders. Photoreal global illumination is very easy offline but very difficult in real-time. I don’t have a good answer for you, but my guess would be longer than you think. One more console generation seems too quick. Maybe in two more generations? That would be PS6 and the 5th gen Xbox? And if we need to render in 4k then add another generation which puts us at PS7 and 6th gen Xbox. It’ll be a while.

Of course, they will never fully line up because of the content costs. Trailers and feature-length movies simply have a much higher budget per second than what the full game can afford. This difference is more than an order of magnitude in some cases.

Rashid K. Sayed: You have started your own scanning services. Given that competition is stiff, what are your plans to grow your service further?

John Hable: That’s a good question and it really depends on the customer base. My primary market is video games that want to push their facial animation quality. Everyone loves the idea of scanning but it’s not easy to put together a complete workflow for going from raw scans to a high-quality facial animation in the actual game engine. As more games embrace facial scanning my aim is to have the optimal quality to price ratio for real-time applications.

A ton of work goes into making scans usable in a game engine. The head needs to be aligned to your base topology for every shape. The scans need to be stabilized and isolated into their key movements. The symmetry of the eyes and lips often needs to be fixed which requires warping the whole head. The textures need to be aligned so that they blend together with minimal cross blending. The eyelids need to be cleaned up to maintain symmetry and align with the eyeball. It’s a major undertaking. The main selling point of the Filmic Worlds head scanning service is that all that work is done for you. The actor or actress walks in to my office and a blendshape rig magically walks out.

Rashid K. Sayed: Assuming that you have seen the E3 2014 Uncharted 4 trailer, what are your thoughts on the facial animations of Drake? Do you think that is the standard that game developers should aim for in the future or is it going to get even better than that?

John Hable: Naughty Dog is doing some very interesting things with their facial animation. I’m really excited to play it! But I really can’t say anything about it until I see the results in the shipped game.

Rashid K. Sayed: You were behind the HDR Lighting tech in Uncharted 2, which, by the way, was phenomenal at the time. With more complex solutions such as Global Illumination and physically based rendering being employed, where do you think lighting tech is heading next?

John Hable: For me, my major complaint these days with games is light leaking. It is too expensive to have a shadow on every light that you make in a game so you have this effect where light seems to bleed through objects. As an example, you might see a character with a bright light behind him or her. Then you will see these bright lights on the creases of his or her clothes which should not be there. That is light leaking and it drives me crazy. You also see it a lot on muzzle flashes. That is the next thing that I would like to see improved.

Global Illumination still needs some work too. In many games with Global Illumination I still feel like objects lack good contact shadows in ambient light. And the falloff often does not look natural to me. Good baked lighting in a video game is still not a solved problem.

Rashid K. Sayed: The PS3 actually had a better CPU compared to the PS4. I mean the CELL had amazing potential with its dedicated set of SPUs. It was just that it was very hard to learn and optimize games for it. Do you think the PS3 was ever utilized to its full potential?

John Hable: I’d say that the PS3 was more-or-less maxed out. On Uncharted 2 most of the code running on the SPUs was hand-optimized assembly, and at that point there is really no way to push it farther. Naughty Dog squeezed a little more water out of that rock for Uncharted 3 and The Last of Us, but there really isn’t much performance left to get. There are several other games that pushed the console about as far as it could go but I can’t talk specifics. Certainly the early Xbox One and PS4 games are doing things that would be impossible on the Xbox 360 and PS3.

Rashid K. Sayed: At a time when every major development studio was struggling to make awesome looking games on the PS3, you guys at Naughty Dog were delivering visually fantastic games like Uncharted. What was the secret sauce behind that success?

John Hable: There isn’t really a magic bullet. On the graphics side, Naughty Dog has a very talented team. So I guess the secret to great graphics is you need to hire great people. That’s not much of a secret.

But if there is one unique aspect of Naughty Dog, it is their hiring. On the programming side, no matter what your resume is they give everyone the same general questions, and if you can make it through you get the job. Naughty Dog programming interviews follow a pretty uniform standard so in my opinion they do a good job of actually testing candidates based on their ability.

In the industry, we have a tendency to think that everyone who worked on a great game must be great, but it’s not true. When you interview someone with a great game on their resume, it is possible that the candidate was the superstar who made it great and you should hire him or her immediately. It is also possible that the candidate was dead weight and coasted off everyone else’s hard work. And on a terrible game there is probably at least one person who did a herculean job to get the game to actually ship. You can’t judge people solely by the games they worked on. The Naughty Dog philosophy is that everybody needs to be tested. All artists have to take an art test and all programmers have to pass programming test questions. It can come across the wrong way to people but it minimizes the bias.

So I guess my main advice about hiring would be to keep an open mind. If a candidate’s last game failed miserably, that person could have been the sane person in the asylum. Sometimes the leadership makes bad decisions which sabotage the efforts of a talented and hard-working team. My one takeaway is that you can’t judge someone by the games they worked on.

Rashid K. Sayed: DirectX 12 and Vulkan provide a substantial performance boost over DirectX 11. How much will this contribute to facial scanning and animation quality in gaming for the coming years?

John Hable: The short answer is that newer APIs will make the CPU faster, but will probably not have much effect on the GPU. The improved APIs change how long it takes for you to tell the GPU what you want it to do, but they have no effect on how long it takes the GPU to actually execute those instructions. Reread that sentence if it doesn’t make sense.

If your game is bottlenecked by the CPU, going to DirectX 12 or Vulkan will greatly help your game. But if you are bottlenecked by the GPU then the updated APIs will help little or not at all. Since many PC games are bottlenecked by the CPU, you will see massive gains there. However, console games are usually bottlenecked by the GPU so I would not expect significant changes from newer APIs.

The GPU is the same regardless of if you are using OpenGL, DirectX11/12, or Vulkan. Let’s say that you are drawing a cute little family of bunnies. These bunnies are made of triangles and each bunny has its own colour map, normal map, etc. With OpenGL or DirectX 11 you would have to describe each of these bunnies one at a time. The first bunny has a red colour map. The second bunny has a blue colour map. The third bunny has a green colour map. And every frame you have to tell the GPU the same thing over and over again.

DirectX12 and Vulkan are much more efficient. In the first frame, you can describe all the bunnies to the GPU. You tell the GPU that there is a red one, a blue one, and a green one. Then they can all be drawn together. With DirectX 11 and OpenGL you have to describe all the bunnies every frame but with DirectX 12 and Vulkan you can just say “draw all the bunnies”. I’m oversimplifying but that is the idea.

The key idea is that the communication between the application and the GPU is much more efficient. You can convey the same information with less overhead. But the amount of time that it takes to actually render those bunnies is the same. The GPU does not care if the commands came from DirectX 11/12, OpenGL or Vulkan. It takes the same amount of time to actually draw those triangles. But the communication (which takes CPU time) is much faster.

So if your game is bottlenecked by the overhead of telling the GPU what you want to do, then the APIs are a huge help. This situation happens a lot on PC games where you have a powerful card sitting idle because it can draw things faster than it can be told to draw them. But console games tend to keep the GPU working hard so there are fewer gains to be made.
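
The bottleneck argument can be sketched with a toy model. It assumes CPU submission and GPU rendering overlap perfectly, so the slower of the two sets the frame time. The function names and all millisecond figures below are made up for illustration and are not measurements from any game or API.

```python
def frame_time_ms(cpu_submit_ms, gpu_render_ms):
    """Toy model: CPU and GPU work overlap, so the slower side sets the pace."""
    return max(cpu_submit_ms, gpu_render_ms)

def speedup_from_cheaper_submission(cpu_submit_ms, gpu_render_ms, cpu_scale):
    """Frame-rate gain when only the CPU submission cost is scaled by cpu_scale."""
    before = frame_time_ms(cpu_submit_ms, gpu_render_ms)
    after = frame_time_ms(cpu_submit_ms * cpu_scale, gpu_render_ms)
    return before / after

# Hypothetical CPU-bound PC game: submission dominates, so a cheaper API helps a lot.
pc_cpu_bound = speedup_from_cheaper_submission(30.0, 10.0, 0.25)

# Hypothetical GPU-bound console game: the GPU time is unchanged, so nothing improves.
console_gpu_bound = speedup_from_cheaper_submission(12.0, 33.0, 0.25)

print(pc_cpu_bound, console_gpu_bound)  # prints "3.0 1.0"
```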

The GPU might make some gains along the way, though. Ideally you would render objects from front to back to minimize the amount of overdraw in a scene. Unfortunately that would have too many state changes and it would take DirectX 11 and OpenGL too long to translate those commands to the GPU. Many games are intentionally making “bad” decisions for the GPU to help out the driver on the CPU.

For example, let’s say that your bunnies have multiplied and now you have 5 red bunnies, 5 blue bunnies, and 5 green bunnies. For the GPU, the ideal way to render the bunnies is front to back to minimize overdraw. But the cost of telling the driver to do that would be too slow with DirectX 11 and OpenGL. Telling the GPU to render red, blue, blue, green, red, blue, etc. would have too many state changes.

So you have to render all the red bunnies together, followed by all the blue and green bunnies. With DirectX 12 and Vulkan, the overhead is low enough that we can render the bunnies in the optimal front to back order. Of course I’m oversimplifying and there are other considerations but the point is the same.
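
The trade-off between draw order and state changes can be counted directly. This sketch uses the 15 bunnies from the example, with made-up depths, and treats every change of colour map between consecutive draws as one state change. That is a simplification of what a real driver tracks.

```python
def count_state_changes(draw_order):
    """Count how often the material changes between consecutive draws."""
    materials = [material for material, _depth in draw_order]
    return sum(1 for a, b in zip(materials, materials[1:]) if a != b)

# 5 red, 5 blue and 5 green bunnies; depths are invented so colours interleave.
bunnies = [(["red", "blue", "green"][i % 3], float(i)) for i in range(15)]

# Ideal for the GPU: nearest first, which minimizes overdraw.
front_to_back = sorted(bunnies, key=lambda b: b[1])

# Ideal for a high-overhead driver: group by material, then by depth.
by_material = sorted(bunnies, key=lambda b: (b[0], b[1]))

print(count_state_changes(front_to_back))  # prints 14
print(count_state_changes(by_material))    # prints 2
```

With a low-overhead API the 14 state changes become affordable, which is what allows the front-to-back ordering.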

Rashid K. Sayed: PC games are already surpassing 8-12GB as recommended requirements. How do you think the new consoles will cope with this, and do you think the GDDR5 RAM in the PS4 will keep it from becoming outdated for longer than, say, the DDR3 in the Xbox One?

John Hable: In general, I would not worry about the consoles becoming outdated. Remember, the PS3 and Xbox 360 have only 512MB of RAM, but the PC versions of the same games usually require 2GB or more. On consoles you have more control over memory allocations. The 8GB of RAM in both the Xbox One and PS4 should be plenty for the remainder of this console generation.

Rashid K. Sayed: On another note, PC gaming has seen significant leaps and bounds over the current generation of consoles in the past year, especially with Nvidia constantly introducing newer graphics cards. What do you believe is the next step for PC gaming hardware in the coming years?

John Hable: On the PC side, the main thing that I want is more power. The GPU does most of the things that I want it to do and DirectX 12 and Vulkan should solve our driver issues. The most interesting thing for me is VR and my dark horse feature is Foveated Rendering.

As you know, one of the big problems with VR gaming is that it requires massively more GPU power than a standard game. Let’s pretend that you have a typical game running 1080p at 30 fps. To run in VR, you need to run a minimum of 60 fps and possibly up to 120 fps. You need about 1.5x as much resolution in each dimension due to distortion. And you need to render the scene twice because you have two eyes. The GPU needs to push through a staggering number of pixels.
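
A back-of-envelope version of this estimate simply multiplies the three factors. It treats them as independent and assumes each eye gets a full view at the scaled resolution, so it likely lands at the high end. Hable's own figure later in this answer is 5x to 10x. The function name is hypothetical.

```python
def vr_pixel_rate_multiplier(base_fps, vr_fps, resolution_scale_per_axis, eye_count):
    """Naive product of frame-rate, resolution and per-eye factors."""
    return (vr_fps / base_fps) * (resolution_scale_per_axis ** 2) * eye_count

# Baseline from the text: a 1080p game at 30 fps.
low = vr_pixel_rate_multiplier(30, 60, 1.5, 2)    # 60 fps in VR
high = vr_pixel_rate_multiplier(30, 120, 1.5, 2)  # 120 fps in VR

print(low, high)  # prints "9.0 18.0"
```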

The idea behind foveated rendering is that the human eye only has full resolution in a very small field of view (FOV). While our FOV is about 100 degrees, we can only see at full resolution in a tiny 2 degree circle. If you have really good eye tracking you can figure out which 2 degree circle on the screen the user is focused on. Then you render at full resolution in that tiny area and much lower resolution everywhere else. In theory it can save an enormous amount of rendering time.

Tracking your eyes with this level of accuracy is really hard but it can be done. There was an interesting Microsoft Research paper which showed that foveated rendering has the potential to reduce the pixel count by 10x to 15x on a display, although in practice the gain was 5x. While VR requires 5x to 10x more pixels than normal rendering, foveated rendering in theory reduces the pixel count by the same amount. In other words, VR would require rendering the same number of pixels as a typical screen. That would be exciting. Foveated rendering is not easy, but it has to be easier than making everyone’s GPU 5x faster!

But it gets even better because the FOV in VR is much wider. Typical VR systems have a FOV of around 100 degrees whereas the FOV of your physical TV or monitor is much smaller. As an example, a 42” TV 8 feet away has about a 21 degree FOV. That 2 degree circle is a much smaller percentage of the display in VR than it is on a desktop monitor or TV. That makes the gains more significant. According to that Microsoft Research paper, at a 70 degree FOV with very high resolution you could theoretically get a 100x speedup. Getting a 100x speedup in practice is pretty farfetched but even a 10x speedup would be huge. That’s the equivalent of skipping a console generation!
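
The TV figure can be checked with basic trigonometry. The sketch assumes a 16:9 panel and measures the horizontal angle from a viewer centred in front of the screen. Neither assumption is stated in the interview.

```python
import math

def horizontal_fov_degrees(diagonal_inches, distance_inches, aspect=(16, 9)):
    """Horizontal angle subtended by a flat screen viewed head-on from its centre line."""
    w, h = aspect
    width = diagonal_inches * w / math.hypot(w, h)
    return math.degrees(2 * math.atan((width / 2) / distance_inches))

# A 42 inch TV viewed from 8 feet (96 inches).
tv_fov = horizontal_fov_degrees(42, 8 * 12)
print(f"{tv_fov:.1f} degrees")  # a little under 22 degrees
```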

Foveated rendering has never really caught on, but there are several other reasons why foveated rendering might be practical for VR. First, eye tracking is so much easier because you can mount the camera and IR projector right inside. Second, foveated rendering is not practical on a TV if you want more than one person at a time looking at it. That problem solves itself for VR. Third, there are other compelling uses for eye tracking in VR headsets so it’s reasonable that a large number of consumer VR headsets will have eye tracking before foveated rendering takes off. There is a potential solution to that chicken and egg problem. I have trouble imagining a world where millions of people have accurate eye tracking on their desktop PCs but it could realistically happen with VR.

The performance gain is potentially huge and I’m cautiously optimistic that foveated rendering will become commonplace in VR down the road. In fact, given how wide the FOV is in VR, it is plausible that VR rendering could even be faster than rendering to a standard display by a significant margin. I think most people in the VR world are severely underestimating the potential of foveated rendering.

Rashid K. Sayed: What are your thoughts on VR gaming and how will realistic facial animation and scanning ultimately help in the immersion process?

John Hable: VR gaming is really cool! It’s something that I’m actively looking at. In my (admittedly biased) opinion, realistic facial animation is essential to creating immersive experiences in VR. Most demos that I have seen are shying away from photoreal CG characters, either by using cartoony CG characters or cheats with video. Using video to create characters is much easier than full CG because all you have to do is put a camera in front of an actor. But in my opinion you can’t achieve full presence with it.

I agree with Mel Slater’s philosophy that to achieve presence you need to achieve “Plausibility”. The idea is similar to agency. You need to believe that your actions can affect the world around you in some way. The obvious problem with video is that you can’t interact with it. Even with depth reconstruction to give you proper parallax, you will still subconsciously know that you are watching an unchangeable video. Video with depth reconstruction can achieve “Place Illusion”, but it will never achieve “Plausibility”. In other words, you know that it is not plausible that your actions could affect the environment/people around you. Video is still the best solution if doing a full CG character is impossible due to technical or budget limitations. But to achieve presence and really feel like you are in a room with someone else then we need to make true 3d characters.

On a more holistic note you have probably heard something along the lines of “Creating realistic CG faces is the holy grail of film”. It’s not true.

In the film world there is very little real reason to create a full facial rig of a real person who exists today. Benjamin Button proved to me that you can create a photoreal digital actor but it is a very difficult and expensive process. You only create a photoreal digi double if there is some reason why you can’t film the actual person. For example you need digi doubles for impossible stunts (Matrix Reloaded/Revolutions, and many other films), making someone drastically younger or older (Benjamin Button and Clu), or if the talent is deceased (Orville Redenbacher, Tupac hologram, Bruce Lee, and Paul Walker). But you would never replace a complete actor with a CG version just because you can. It is much easier and cheaper to film the talent on set.

But VR changes everything. The TV show “Game of Thrones” has no reason to create digi doubles of its cast. However, the excellent “Game of Thrones” episodic game from Telltale Games needs to create digi doubles because it is, you know, a video game.

In VR we need to create full facial rigs of real, living actors and actresses, which is not the case for movies and television. As it stands, we only create full facial rigs for the tiny fraction of actors and actresses who need digi doubles in blockbuster movies. If VR storytelling takes off then do we suddenly need to scan thousands and thousands of actors and actresses? I’m not sure if that will happen, but if it does then we need to really automate this process! It’s a daunting challenge but someone has to solve it. Creating CG faces that solve the uncanny valley is not the “holy grail” of movies. But is it the holy grail of VR? I honestly don’t know, but I’m very interested to find out.

Rashid K. Sayed: Much was made about cloud technology and how it would help improve graphical performance but that’s seemingly been lost in the ether. How long will it be till we begin to see real tangible effects of improved graphics on consoles thanks to the cloud?

John Hable: I can’t comment on any specific applications or timelines. But I can give you an overview of the challenges involved. In short, it is really, really hard. The idea is certainly tempting. We have all these servers in the cloud. Intuitively it makes sense that we should offload some of our work to these servers but the devil is in the details.

The main problem is latency. How long does it take to go from your computer to the cloud and back? It takes time to send data up to the cloud, let the cloud crunch some numbers and send the data back. In video games we generally need the results of computations right away. The roundtrip time of sending a megabyte up and a megabyte down can easily be several seconds even if you have a broadband connection.
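
To see why the roundtrip matters, compare it with a frame budget. The link speeds, network delay and server time below are invented for illustration. Real connections vary widely, and the helper name is hypothetical.

```python
def round_trip_seconds(upload_mb, download_mb, up_mbps, down_mbps,
                       network_rtt_ms, server_ms):
    """Transfer time in both directions plus network delay and server compute time."""
    upload_s = upload_mb * 8 / up_mbps        # megabytes to megabits
    download_s = download_mb * 8 / down_mbps
    return upload_s + download_s + (network_rtt_ms + server_ms) / 1000

# Assumed link: 5 Mbps up, 25 Mbps down, 50 ms network delay, 20 ms of server work.
total_s = round_trip_seconds(1, 1, 5, 25, 50, 20)

frame_budget_s = 1 / 30                       # one frame at 30 fps
frames_elapsed = total_s / frame_budget_s

print(f"{total_s:.2f} s, about {frames_elapsed:.0f} frames at 30 fps")
```

Under these assumed numbers the result arrives roughly two seconds later, which is dozens of frames after it was requested.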

Then there are all the things that could go wrong. Wi-Fi signals can have spikes. Packets get lost. How do you recover if the server has a hardware failure? What if a person on your Wi-Fi network starts downloading a big file? What do you do for people with slow internet connections? And of course, how much do those servers cost to use? Just because a computer is in the cloud doesn’t make it free! It’s a very difficult problem. Not impossible, but very difficult.

What graphics features are in a typical game where you could live with getting the results a few seconds after starting the calculation? Not many. I think that a compelling cloud rendering technique would have to be an amazing new feature that no one has really done before. If we think outside the box there might be some really cool things that we could do. But simply offloading existing work into the cloud is hard to justify because of the roundtrip latency and all the things that can go wrong on a network.

Rashid K. Sayed: What are your thoughts on the recent GDC demo for Unreal Engine 4? From your experience, how possible is it for a game to pull off that level of facial animation in real time on UE4?

John Hable: You mean the Unreal 4 Kite demo, right? Unreal has been putting out great demos (and games!) for a long time and the Kite demo is no exception. My understanding is that all features used for the boy are practical in a real game. It was a very ambitious demo and they really pulled it off!