Well, what’s in a gaming processor? A lot, actually. With the consoles moving more toward a GPU-centered design, and the new Pascal and Polaris cards taking up a lot of headline space (justifiably so), the processor, the heart of any console or PC gaming rig (or graphing calculator, for that matter), isn’t quite getting the attention it deserves.

True, a 2500K might lack the Transformerness of a POINTY METAL THING WITH SPINNING FANS AND LIGHTS (something I’m yet to fully comprehend; I try to cover up any latent LED lights because they’re a glaring eyesore when you’re actually trying to game). But that doesn’t make a good processor any less central to your gaming experience. Let’s not forget that up until 1996 and Quake, games used software renderers running off your trusty 386. It was the dramatic increase in visual fidelity around the mid-90s, with the move to full-3D environments, that necessitated specialized graphics hardware. Graphics rendering shifted to GPUs, but other aspects, notably AI simulation, physics calculation, audio, and streaming, remained CPU-centric domains.

With the relative imbalance of CPU and GPU power in the eighth-gen consoles, however, developers have been leaning more heavily on the GPU, with GPGPU (general-purpose computing on graphics processing units) solutions taking on physics (and even audio) tasks that might choke the relatively weak console CPUs. In the PC space, on the other hand, powerful CPUs with fewer cores are still very much relevant. Although the possibilities for GPGPU on PC are tremendous, in practice, GPGPU on PC nets you little more than pretty hair, little specks of debris here and there, and some more pretty hair. For the foreseeable future, you’re still going to need that trusty processor of yours to make sure NPCs walk and talk right, gunshot sounds come from the right direction, and arrows reliably hit knees. With that in mind, let’s give processors their due and learn a thing or two about them.

Let’s start with the eighth-grade basics: a processor is the “brain” of a computer system. It’s what actually executes program code, and it also lets the other parts of the system interact with each other. A CPU core is a single, discrete processing unit. In ye olden days (right up until the Pentium 4), CPUs were single-core and single-threaded. A thread is a sequence of instructions for a particular task, so a single-core, single-threaded processor can only handle one thread at a time. Of course, real-life computer usage involves a lot of multitasking (like looking up boss guides while playing Dark Souls 3).

Through some clever process queuing and scheduling, operating systems like Windows give us the impression that we’re multitasking, when really, the processor is shuffling between tasks very, very quickly. Of course, that impression can only be kept up so far: the more tasks you pile on, the more shuffling has to be done, and the less responsive your system’s going to be. Try running Folding@Home on a Pentium 3 with 57 different YouTube videos open. Yeah, just kidding. A single-core, single-threaded system is going to choke hard if you run more than a few things at a time. What’s the solution? You can either increase the number of physical cores, increase the number of threads each core can handle, or both.
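
To make that shuffling concrete, here’s a toy round-robin scheduler in Python. It’s a minimal sketch, not how Windows actually schedules work (real schedulers juggle priorities, I/O waits, and much more): a single simulated core gives each task a fixed time slice (the hypothetical `quantum`), then sends the task to the back of the queue if it isn’t finished. The task names and work units are made up for illustration.

```python
from collections import deque


def round_robin(tasks, quantum):
    """Simulate one core time-slicing between tasks.

    tasks: dict mapping a task name to the units of work it still needs.
    quantum: the most units a task may run before it is preempted.
    Returns the (task, units_run) slices in the order the core ran them.
    """
    queue = deque(tasks.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(quantum, remaining)
        timeline.append((name, ran))
        remaining -= ran
        if remaining > 0:
            # Not finished yet: back of the line it goes.
            queue.append((name, remaining))
    return timeline


if __name__ == "__main__":
    slices = round_robin({"mouse": 1, "spotify": 3, "cat_videos": 4}, quantum=2)
    for name, ran in slices:
        print(f"{name:>10} ran for {ran} unit(s)")
```

With three tasks and a slice of two units, the core runs `mouse`, `spotify`, and `cat_videos`, then comes back around to finish `spotify` and `cat_videos`. Pile on more tasks and each one waits longer for its next turn, which is exactly the loss of responsiveness described above.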

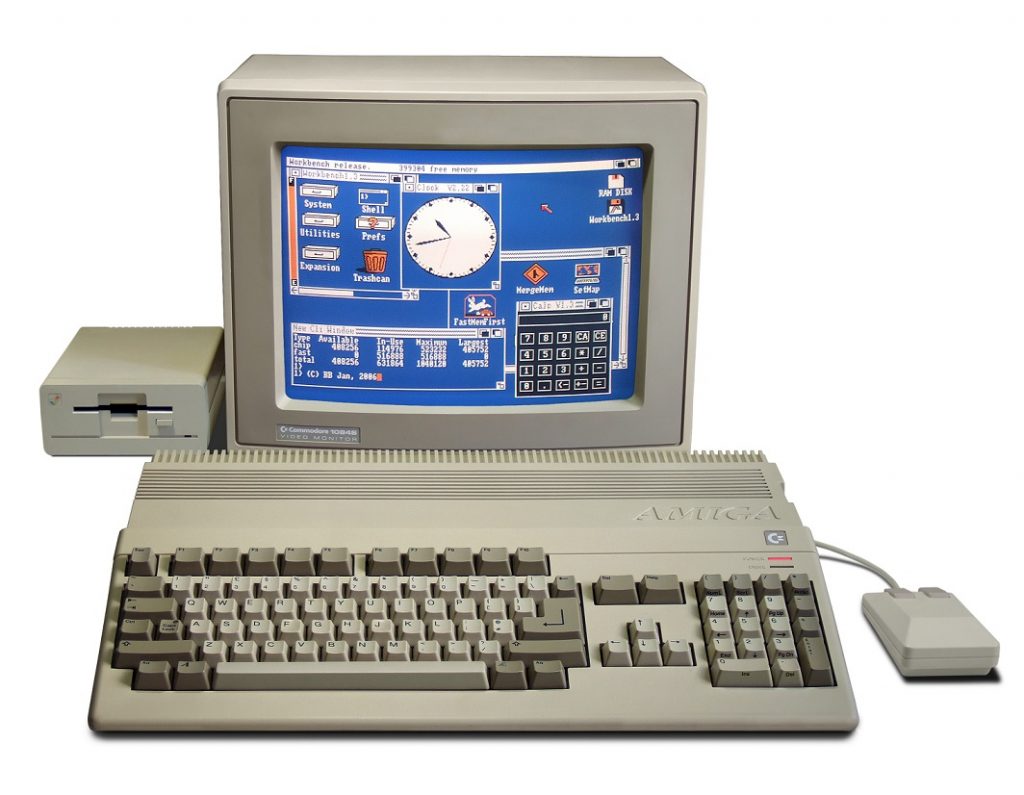

Multithreading takes advantage of the fact that not every thread needs all of a CPU’s processing power at any given point in time. Imagine if your processor maxed out every time you moved your mouse cursor. It doesn’t, so there’s extra headroom to get other tasks done. Earlier, we talked about queues and schedulers, and how operating systems switch between tasks really quickly to give you the impression of multitasking. Clearly, there’s multithreading going on there, because each (mouse cursor) thread (Spotify) gets (cat videos) its slice of time. See what we did there? Operating systems were doing this kind of time-sliced multitasking in software as far back as AmigaOS in the mid-1980s. Even a 7 MHz Motorola 68000 could do it.

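What’s this hyperthreading business, then? Hyperthreading is Intel’s hardware-level multithreading solution (a form of simultaneous multithreading), which debuted on desktops with the Pentium 4 back in 2002. To an operating system, a hyper-threaded processor core looks like two cores, and it’s treated as such. The two logical cores share one physical core’s execution resources, so it’s no substitute for a second real core, but it keeps more of the core busy and lets multithreaded code run more efficiently than it would on a single logical core.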
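
You can see the operating system’s side of this from Python’s standard library. `os.cpu_count()` reports logical processors, the units the OS actually schedules threads onto, so a hyper-threaded dual-core chip will typically show up as four. The standard library has no call for the physical core count, and the helper name below is our own.

```python
import os


def logical_processor_count():
    # os.cpu_count() reports *logical* processors: on a dual-core chip with
    # hyperthreading this is typically 4, not 2. It can return None when the
    # count can't be determined, so fall back to 1 in that case.
    count = os.cpu_count()
    return count if count is not None else 1


if __name__ == "__main__":
    print(f"The OS sees {logical_processor_count()} logical processor(s)")
```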

Why does this matter in terms of gaming? Most consoles (apart from the PS4 and Xbox One, which have highly PC-like hardware) run specialized, custom hardware. Because game code lends itself naturally to multithreading (you’ve got lots of highly similar tasks that need to be executed at the same time), console hardware itself has historically been a lot wider than PC hardware. Take the PlayStation 3, for example.
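
Here’s a rough sketch of what “lots of highly similar tasks” looks like: the same small update job applied to every NPC, farmed out to a pool of worker threads with Python’s `concurrent.futures`. The `Npc` type and `update_npc` function are hypothetical stand-ins for an engine’s entity update. Note that CPython’s global interpreter lock keeps pure-Python work like this from truly running in parallel; a real engine’s job system would be native code spreading these jobs across cores.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Npc:
    name: str
    x: float   # position along one axis
    vx: float  # velocity in units per second


def update_npc(npc, dt):
    # The same small job for every NPC, just on different data:
    # advance the NPC's position by dt seconds.
    return Npc(npc.name, npc.x + npc.vx * dt, npc.vx)


def update_world(npcs, dt, workers=4):
    # Hand each NPC update to a pool of worker threads.
    # pool.map() returns results in the same order as the input list.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda npc: update_npc(npc, dt), npcs))


if __name__ == "__main__":
    guards = [Npc(f"guard{i}", float(i), 1.5) for i in range(8)]
    for npc in update_world(guards, dt=0.5):
        print(npc)
```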

The PlayStation 3’s Cell processor features one PPE (Power Processor Element), which is broadly comparable to a single x86 processor core, and seven active SPEs (Synergistic Processor Elements), six of which are available to games. The SPEs are very good at handling SIMD (Single Instruction, Multiple Data) instructions, which apply the same operation to many pieces of data at once, a pattern that’s particularly common in game code. This is accompanied by the RSX GPU, which is essentially a cut-down Nvidia 7800 GTX. The RSX is relatively weak, so first- and second-party PS3 developers like Naughty Dog shunted much of the graphics work onto the SPEs. This wide design helps explain why PS3 exclusives tend to look better than most Xbox 360 titles (or PC games running on a 7800 GTX), while multiplats, especially those running on engines that favour fewer cores (like Skyrim’s), tend to run into performance issues.
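
To illustrate the SIMD idea, the sketch below counts “instructions” for adding two lists one element at a time versus four lanes at a time, roughly how a 128-bit SIMD unit handles four 32-bit floats per instruction. It’s a model only: plain Python doesn’t emit real SIMD instructions, and the 4-lane width is an assumption chosen for illustration.

```python
def scalar_add(a, b):
    # Scalar style: one add "instruction" per element.
    out, issued = [], 0
    for x, y in zip(a, b):
        out.append(x + y)
        issued += 1
    return out, issued


def simd_add(a, b, width=4):
    # SIMD style: one "instruction" adds `width` lanes at once.
    # To keep the sketch short, inputs must match in length and fill whole vectors.
    if len(a) != len(b) or len(a) % width != 0:
        raise ValueError("inputs must be equal length and a multiple of width")
    out, issued = [], 0
    for i in range(0, len(a), width):
        # Same operation, applied to a whole chunk of data in one step.
        out.extend(x + y for x, y in zip(a[i:i + width], b[i:i + width]))
        issued += 1
    return out, issued


if __name__ == "__main__":
    a = [float(i) for i in range(16)]
    b = [0.5] * 16
    print("scalar instructions:", scalar_add(a, b)[1])  # 16
    print("SIMD instructions:  ", simd_add(a, b)[1])    # 4
```

Same data, same result, a quarter of the instructions: that’s why hardware built around wide SIMD units suits game workloads that repeat one operation across lots of vertices, particles, or audio samples.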

As late as 2010, dual-core systems were standard in the PC space. Moreover, because console hardware in previous generations was so different from PC hardware, it wasn’t feasible to carry console-specific optimizations over to PC: what works well on the PS3’s Cell processor is not likely to work at all on a Core 2 Duo. For these two reasons, multiplats on PC weren’t highly multithreaded; they favoured fewer, stronger cores. However, this changed drastically with the arrival of the PS4 and Xbox One, both of which use eight weak Jaguar cores. Because consoles and PCs now shared a common x86 architecture, optimizations could carry over between them, and games on PC started utilizing more cores and more threads.

Hyperthreading is crucial in this scenario, especially if you’re at the budget end of things. Both a Core i3 and a Pentium are dual-core parts, but hyperthreading lets the i3 handle twice as many threads (four instead of two). As developers code wider, modern games increasingly use as many threads and cores as they can get. While a Pentium may stutter and choke in CPU-intensive situations, an i3 will often be able to pull through without dragging framerates into unplayable territory. This doesn’t mean you should run out and buy an octa-core processor for your gaming rig, though. Coding paradigms have as much to do with people and their habits as with hardware. Old habits die hard, and few engines apart from DICE’s Frostbite really scale well beyond four cores. If you want the best gaming performance, you still need fewer, stronger cores, and that means higher clockspeeds and higher IPC.
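
One standard way to see why extra cores hit diminishing returns is Amdahl’s law: if only a fraction p of the CPU work can be split across n cores, the best-case speedup is 1 / ((1 - p) + p / n). The sketch below assumes a hypothetical engine where 60 percent of the per-frame CPU work parallelizes; the real figure varies by engine and by game.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Amdahl's law: best-case speedup on `cores` cores when only
    `parallel_fraction` of the work can be split across them."""
    if not 0.0 <= parallel_fraction <= 1.0 or cores < 1:
        raise ValueError("need 0 <= parallel_fraction <= 1 and cores >= 1")
    serial = 1.0 - parallel_fraction
    # The serial part runs at single-core speed no matter how many cores you add.
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical engine: 60% of each frame's CPU work parallelizes.
    for cores in (1, 2, 4, 8):
        print(f"{cores} cores: {amdahl_speedup(0.6, cores):.2f}x")
```

With that assumed 60 percent, going from four to eight cores only lifts the speedup from about 1.82x to 2.11x, while a higher clockspeed or IPC speeds up the serial part as well. That’s the arithmetic behind “fewer, stronger cores.”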

What’s clockspeed, then? It’s the rate at which the processor’s internal clock ticks, and each tick (or cycle) is a step in which the processor can do work. It’s measured in hertz (Hz) for a reason. Go back to high school physics. Remember wave frequency? Yeah, this is the same thing. A CPU’s clockspeed is derived from a reference clock on the motherboard (itself generated from a crystal oscillator), multiplied by the processor’s clock multiplier. Older CPU architectures could execute at most one instruction per cycle. That’s still a lot: 3.2 billion instructions per second at, say, 3.2 GHz. But modern architectures are superscalar, meaning that they can execute more than one instruction per cycle. IPC is short for instructions per cycle, and it’s a measure of how much a processor gets done in one cycle. A modern game requires a processor to perform billions of operations every second. Just let that sink in for a moment.
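
The relationship is simple multiplication: a core’s throughput is its clockspeed times its IPC. The sketch below reproduces the 3.2 GHz figure and splits it into a per-frame budget at 60 FPS. The IPC of 2 used for the superscalar case is a hypothetical average, since real IPC varies a lot with the workload.

```python
def instructions_per_second(clock_hz, ipc):
    # One core's throughput: cycles per second times instructions completed per cycle.
    return clock_hz * ipc


def instructions_per_frame(clock_hz, ipc, fps):
    # How many instructions a single core can spend on each frame at a given frame rate.
    return instructions_per_second(clock_hz, ipc) / fps


if __name__ == "__main__":
    clock = 3_200_000_000  # 3.2 GHz
    print(instructions_per_second(clock, 1))  # one per cycle: 3.2 billion per second
    print(instructions_per_second(clock, 2))  # hypothetical superscalar average of 2 per cycle
    print(round(instructions_per_frame(clock, 1, 60)))  # roughly 53 million per frame at 60 FPS
```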

More efficient architectures feature higher IPC, meaning that the processor can get more done even if it’s running at a lower clockspeed. Apple’s CPU designs are very efficient, with high IPC, which is why a dual-core A8 processor clocked at a mere 1.4 GHz can hold its own against a quad-core Snapdragon 801 at 2.5 GHz. This is very important because of an annoying thing called the First Law of Thermodynamics: the power your processor draws doesn’t disappear, it ends up as heat. The higher your clockspeeds, the more power your processor consumes, and the more heat it generates. This, of course, is what happens when you overclock a processor, which essentially means running it at a higher clock rate than the factory set. Every individual piece of electronics (or heck, pretty much anything made in a factory, even your clothes) varies slightly in its tolerances. It might be close, but your FX-8320 isn’t going to have the same tolerance to heat and voltage as mine.
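
For a rough sense of why overclocking heats things up so quickly, a processor’s dynamic (switching) power is commonly approximated as P ≈ C·V²·f: capacitance times voltage squared times frequency. Higher clocks usually need more voltage to stay stable, so power climbs faster than frequency. The sketch below ignores leakage power, and the clocks and voltages in the example are hypothetical, not specs for any particular chip.

```python
def relative_dynamic_power(freq_ratio, voltage_ratio):
    # Dynamic power scales roughly as P ~ C * V**2 * f.
    # On the same chip (same capacitance C), new/old power is voltage_ratio**2 * freq_ratio.
    return voltage_ratio ** 2 * freq_ratio


if __name__ == "__main__":
    # Hypothetical overclock: 3.5 GHz -> 4.5 GHz, with core voltage raised 1.20 V -> 1.35 V.
    freq_ratio = 4.5 / 3.5
    ratio = relative_dynamic_power(freq_ratio, 1.35 / 1.20)
    print(f"about {ratio:.2f}x the dynamic power for {freq_ratio:.2f}x the clock")
```

In this made-up case, a roughly 29 percent clock bump costs about 63 percent more dynamic power, all of which ends up as heat your cooler has to remove.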

There can be significant variation between individual pieces, so manufacturers set a minimum threshold that still lets them keep and sell a reasonable number of pieces. The rest aren’t always tossed in the dumpster, though. Sometimes, they’re sold to you for cheaper! That sorting process is called binning. What it means is that your processor (as in specifically yours) may be able to tolerate much higher temps and voltages, and it may be able to run at higher-than-advertised frequencies. Because manufacturers have had decades to get their acts together, that “may” is more accurately a “most likely.”
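
Here’s a simplified sketch of the binning idea: each tested chip goes to the fastest product tier whose threshold it clears, and anything that misses every tier is scrapped. The tier names, thresholds, and measured values are all hypothetical; real binning also weighs voltage, power leakage, and defective cores, not just a single frequency number.

```python
def bin_chip(max_stable_ghz, tiers):
    """Assign a tested chip to the fastest product tier it can reliably hit.

    tiers: list of (tier_name, required_ghz), sorted from fastest to slowest.
    Returns None if the chip misses every threshold (it gets scrapped).
    """
    for name, required_ghz in tiers:
        if max_stable_ghz >= required_ghz:
            return name
    return None


if __name__ == "__main__":
    # Hypothetical product tiers and test results, purely for illustration.
    tiers = [("fast", 4.0), ("mid", 3.5), ("budget", 3.0)]
    for measured in (4.3, 3.8, 3.1, 2.7):
        print(f"{measured} GHz -> {bin_chip(measured, tiers)}")
```

A chip that tested stable at 3.8 GHz but was sold as the 3.5 GHz “mid” part carries some headroom, and that slack is exactly what overclockers go looking for.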

Overclocking itself is something of an art, and with the right cooler (and the right processor, and the right motherboard), you can potentially eke out hundreds of dollars’ worth of free performance. With games these days still favouring fewer, stronger cores, an overclock can give you a meaningful performance boost in CPU-bound games, even if it’s a 6700K that you’re overclocking. Of course, you’re going to have to watch your temperatures. Investing in an aftermarket cooler is the way to go, but depending on how prices stack up, you might be better off putting that money towards a better processor.

Unfortunately, CPU limitations aren’t only a problem in games that feature CPU-intensive AI and physics. This has to do with the relationship between the CPU and the GPU. GPUs are (obviously) great for gaming, but applications in a standard operating system can’t interface directly with the GPU. An application programming interface (API) sits between the application and your graphics card. The API doesn’t talk to your graphics card directly: the game issues draw calls through it, and the API runtime and graphics driver, running on your processor, translate those calls into commands the GPU can execute. APIs like DirectX 11 and OpenGL 4.5 suffer from a relatively high amount of CPU overhead, meaning that a lot of the CPU’s time is spent conveying instructions to the GPU. New “low-level” APIs like Vulkan and DirectX 12 interface with the GPU at a lower level of abstraction, which eliminates much of that CPU overhead. Low-level APIs won’t increase your GPU performance per se, but getting rid of the fluff in between can eliminate CPU-side bottlenecking.
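
A simple way to picture CPU-side bottlenecking: the CPU’s frame time is game logic plus the cost of submitting draw calls, the GPU’s frame time is rendering, and since the two work in parallel on consecutive frames, the slower side sets the frame rate. The per-draw-call costs below are hypothetical numbers chosen to contrast a high-overhead API with a low-overhead one, not measurements of DirectX 11 or DirectX 12.

```python
def frame_time_ms(draw_calls, cpu_us_per_call, other_cpu_ms, gpu_ms):
    """Return (frame_time_ms, bottleneck) for a simplified CPU/GPU pipeline model."""
    # CPU side: AI, physics, audio, etc., plus the overhead of issuing every draw call.
    cpu_ms = other_cpu_ms + draw_calls * cpu_us_per_call / 1000.0
    # CPU and GPU overlap across frames, so the slower of the two sets the pace.
    # (Ties are reported as GPU-bound.)
    if cpu_ms > gpu_ms:
        return cpu_ms, "CPU"
    return gpu_ms, "GPU"


if __name__ == "__main__":
    scene = dict(draw_calls=5000, other_cpu_ms=6.0, gpu_ms=12.0)  # hypothetical scene
    for label, overhead_us in (("high-overhead API", 4.0), ("low-overhead API", 1.0)):
        ms, side = frame_time_ms(cpu_us_per_call=overhead_us, **scene)
        print(f"{label}: {ms:.1f} ms per frame ({1000 / ms:.0f} FPS), {side}-bound")
```

Same GPU, same scene: cutting the per-call overhead moves the bottleneck from the CPU (26 ms per frame) to the GPU (12 ms per frame). That’s the sense in which a low-level API doesn’t make the GPU any faster but stops the CPU from holding it back.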

CPU bottlenecks are a big deal on console, something the atrocious performance of Assassin’s Creed: Unity can attest to. For better or for worse, console specs are what determine the technical quality of games in any given generation. This generation, relatively fast GPUs were paired with anaemic CPUs. The situation’s little different with the mid-cycle refreshes: despite a 33 percent CPU clockspeed hike, the PS4 Neo’s processor is still considerably weaker than a Haswell i3 in modern games. What does this mean for games this generation? The drastic increase in GPU power (especially with the Xbox Scorpio) gives developers the headroom to substantially enhance visuals in relatively static environments. That’s the catch, right there.

RX 480-level GPU performance, paired with low-level hardware access on consoles, means that poly counts and lighting can be drastically ramped up. If developers target 1080p/30 FPS, CGI-quality visuals can’t be ruled out, especially on the Xbox Scorpio. Whether Microsoft’s and Sony’s policies will allow developers to make exclusives for the new platforms remains to be seen, however. Either way, CPU-side bottlenecking (and spiraling development costs) will likely mean that photorealistic Scorpio and Neo games will lack depth. In terms of interactivity, they’ll be much like seventh- and eighth-gen games. Take The Witcher 3, for example. There’s no denying the sheer scale of CD Projekt Red’s latest, or its stunning visuals.

But the basic ways in which you interact with the game (the game behind the game) remain more or less unchanged from The Witcher 2. You still control a somewhat jankily animated character in third person, traverse a more or less static environment, and enter buildings with doors that seem to have no purpose apart from culling the exterior from the frame as quickly as possible. Only some of the NPCs have (admittedly handcrafted) dialogue options, and everyone seems to be milling around aimlessly. Oh, and the trees won’t burn, no matter how much you spam Igni.

What about procedurally generated kinematics, so that every NPC can have unique animations? What about using our experience with chat-bots and machine learning to develop unique and truly dynamic NPC conversations that are never the same twice? Gaming worlds, as they are right now, are so easy to break with everyday logic, so fragile. And they’ll remain that way until meaningful CPU gains allow developers to add depth and wholeness to their worlds through simulation-based systems. Until then, it’s still going to be “go there, fetch that.”