The industry has been full of professionals who talked to us about the present state of gaming and what’s to come in 2016. Recently, GamingBolt had a chance to hear another expert’s views on the innovations and technologies to come – Celtoys owner Don Williamson, who not only worked on the renderer for Splinter Cell: Conviction but was also an engine lead for the Fable engine. As such, Williamson has extensive experience with engine and pipeline optimization, having worked on a number of different games in the past. What is his take on the current and emerging challenges of technologies like DirectX 12 and cloud computing? Let’s find out.

"I started off extending the Splinter Cell engine and ended up writing a new one from scratch to work on Xbox 360. I got something like 45fps on a high-stress level where the old one was hovering around 3fps."

Could you tell us a bit about yourself and what you do?

I’m an engine and pipeline developer of some 20 years now. I sold my first game as shareware at the age of 16, creating many games and engines beyond then as Programmer, Lead Programmer, R&D Head and Project Lead. I created the Splinter Cell: Conviction engine, small parts of which are still in use today, led the Fable engine and co-created Fable Heroes, bending the Xbox 360 in some very unusual ways! I now run my own little company, building a crazy game and helping other developers make theirs better.

You have a great pedigree in graphics programming, having worked at Lionhead and Ubisoft. What was the inspiration behind starting Celtoys, your own company?

I wanted to create my own game and explore technology ideas that nobody in their right mind would pay me to explore. Getting to spread my wings and go back to helping other developers improve their games was an exciting way to help fund this.

What can you tell us about the work you did at Ubisoft and Lionhead? What were your day to day activities like and what were your biggest challenges during those years?

My roles at Ubisoft and Lionhead were very different. I’d spent some 4 years as a project lead before joining Ubisoft and wanted to break away and dedicate as much time as possible to pure code; it’s what I loved most.

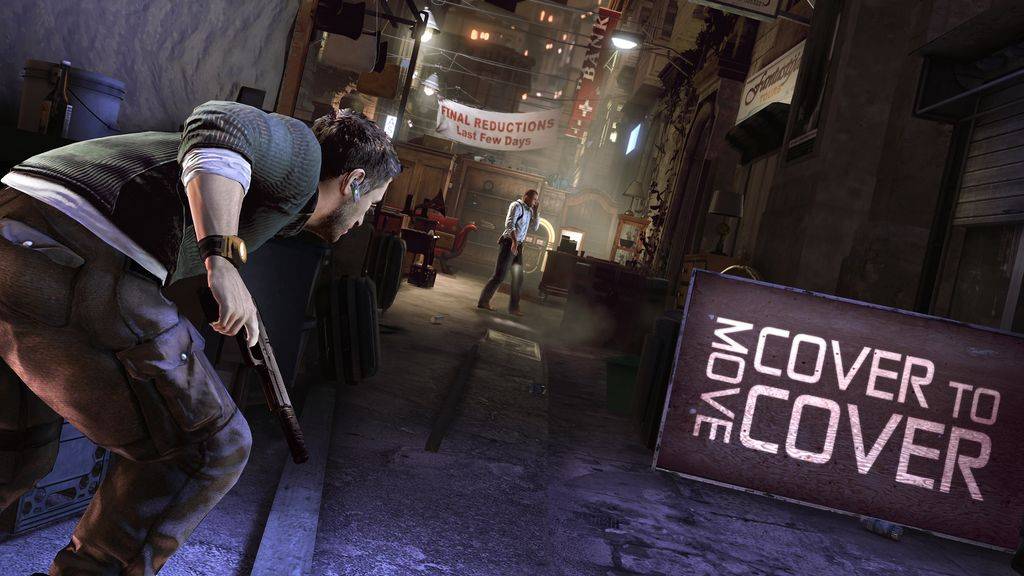

I started off extending the Splinter Cell engine and ended up writing a new one from scratch to work on Xbox 360. I got something like 45fps on a high-stress level where the old one was hovering around 3fps. You could also iterate on code within a couple of seconds, as opposed to 4 or 5 minutes, so it was given the green light. Myself and Stephen Hill then spent 8 months porting features over, improving it, collaborating with technical artists and integrating with UE 2.5 (aka “making it work”). It’s, without doubt, the best engine of my career, although not without its faults. Such great memories!

Fable 2 was different. I came on-board about a year and a half before we shipped and directed the performance efforts, using help from Microsoft internally and external contractors. We had some amazing talent making the game faster so we got from sub-1fps on Xbox to a consistent 30fps, all while content creators were making the game bigger and bigger.

Fable 3 was different again as I was given 18 months to improve the engine and build a team for the Fables beyond. This was 50/50 management/code and by then I knew the engine enough to bend it to my needs. There was a lot of scheduling, Art/LD/Game co-ordination, career planning and feature evaluation, along with the usual deep-dive into code.

Although I didn’t realize at the time, my biggest challenge was swapping out the old renderer with the new one for SC5, while keeping all content authors productive. We were under intense scrutiny because it was such a risky undertaking, but we managed to do it while some 60 people used the engine/editor on a daily basis.

"Since starting the company I’ve built my own engine. I’ve had many licensing enquiries about that as it is sufficiently different to what’s currently available, but you can safely say that the amount of work required to build an engine rises exponentially with the number of clients."

Speaking about Fable 2 and 3, we read that a large amount of your code was used for the engine. Given the middle-of-the-road acclaim the franchise has received, what do you feel were the most distinguishing features of Fable’s engine? Though Fable Legends is fairly different from what Lionhead has done in the past, what excites you the most about its direction?

There were so many talented people that helped with the engine it’s impossible to list all its distinguishing features without leaving lots out.

Visually and in motion it was quite beautiful. A large part of this was down to the work of Mark Zarb-Adami (now at Media Molecule) who did all the tree animation and weather effects. His work combined effectively with the post-processing by Francesco Carucci, who had a great eye for colour.

We had 3 amazing low-level engine programmers in Martin Bell, Kaspar Daugaard and David Bryson who ensured stability, designed a very sane overall architecture and added many features like character customization/damage, texture streaming and fur.

Later on the engine team acquired new blood with Patrick Connor and Paul New, who improved the water, created some incredible environment customization tools and built a new offline lighting engine to accelerate the work of our artists.

Some interesting distinguishing features include:

At the time I think Gears of War had something like 3 or 4 textures per character on-screen. Due to the way we randomized and allowed customization of our characters, some would have as many as 90 textures. Getting this to work at performance for over 50 characters on-screen was no small undertaking.

By the time Fable 3 shipped, we could have up to 25,000 procedurally animating trees in view on Xbox 360. Unfortunately some of the most impressive levels we had to showcase this were cut down slightly before some of the bigger optimizations came online. Still, I wasn’t aware of any game that could get close to that at the time or since, while having an entire city and people rendering in the background.

We were one of the first games to take temporal anti-aliasing seriously and make it our primary filtering method. It looked better than MSAA, recovered more detail, required less memory, gained 3ms performance, and reduced latency and overall engine complexity. It was a massive win everywhere, although it suffered from ghosting that haunted us to the very end. We had it mostly solved but weren’t allowed to check the final fixes in due to some minor problems they introduced elsewhere. Needless to say, we had a few hundred ideas on how to make it much better for Fables beyond.
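
To illustrate why ghosting comes naturally with this family of techniques, here is a toy sketch in Python. It is not Fable's implementation: it assumes the simplest possible form of temporal accumulation, an exponential moving average with a hypothetical blend weight `alpha`, and leaves out the reprojection, sub-pixel jitter and history rejection a production renderer would need.

```python
def temporal_accumulate(history, current, alpha=0.1):
    """Blend the current frame into the accumulated history, pixel by pixel."""
    return [h + alpha * (c - h) for h, c in zip(history, current)]


def ghost_after(frames, alpha=0.1):
    """Residual brightness left at a pixel that a bright object has moved away from."""
    history = [1.0, 0.0]   # the object used to cover pixel 0
    current = [0.0, 1.0]   # the object now covers pixel 1
    for _ in range(frames):
        history = temporal_accumulate(history, current, alpha)
    return history[0]


if __name__ == "__main__":
    # Print how much of the old image is still visible after n frames.
    for n in (1, 10, 30):
        print(n, round(ghost_after(n), 4))
```

In this toy model the stale value decays by a factor of `1 - alpha` per frame rather than vanishing at once, which on screen would read as a fading trail behind anything that moves.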

There was also our offline light map baker. Fable 2 took up to 7 days to light the vertices of a single level, causing massive trouble during the final months. On Fable 3 we created a new GPU Global Illumination light baker that baked 100x as much data to textures, at 100x the performance, getting similar levels from 7 days down to a few minutes. By the time Fable 3 shipped, levels got much bigger so they ended up taking around an hour. That’s when we added GPU network distribution to the engine, bringing compile times down to around 5 minutes. It was orders of magnitude faster than any of the commercial options available at the time.

What interests me about Fable Legends is that it’s a completely new team and I’m excited to see what new directions they take the game in.

Celtoys deals with improving performance of games, basically optimizing engines and the background framework. However, was there ever a thought of making your own engine and using it for licensing purposes? Furthermore, what are your thoughts on the various challenges in developing a custom engine?

Since starting the company I’ve built my own engine. I’ve had many licensing enquiries about that as it is sufficiently different to what’s currently available, but you can safely say that the amount of work required to build an engine rises exponentially with the number of clients. At the moment you can still beat established engines for performance, visuals and iteration time if you lock your feature set to one game only. It won’t be long before this also will be impossible so I’m enjoying it while I can.

This also gives me the benefit of being able to come on-site to existing devs and use the tools I’ve developed these last few years to immediately be effective.

Developing a new engine is a huge topic as its potential clients can vary from tiny teams to massive teams; from those with money to those watching every penny; and from those who want to innovate on gameplay to those who want to innovate on engine features.

One broad observation relevant today is that if you don’t have expertise in building one, right from the low-level programmer to the high-level manager, you’re in trouble before you start. You used to be able to bungle your way through, making lots of mistakes and still innovating, but that time is long gone.

However, one thing I can’t stress enough is that building new game engines is easy. Easy, that is, compared with building the tools required to put the power in the hands of content authors. These are the guys who will sell it and break it, demonstrating to the world what it’s capable of and forcing you to improve the feature set in the process. If you don’t make their lives easy, you will languish. I don’t believe I have seen any custom engine pipeline get this even close to right.

"What’s interesting is that even the simplest of techniques, like basic shadow mapping and a single light with ambient background, can look very convincing when viewed in stereo. Aliasing is massively distracting and there is no doubt that resolutions need to increase and the quality of the pixel needs to improve."

Can you please give us a few examples where Celtoys has helped game developers optimize the game’s performance?

The first example is Fable 2; I was contracting for a while before Lionhead convinced me to come aboard full time.

After Fable 3, I helped Creative Assembly/SEGA with Total War: Rome 2 optimize performance across many PCs. A great example is the terrain system where within 3 months we had 100x performance with 10x the draw distance, blending hundreds of thousands of procedural/streamed terrain layers in real-time. I’ve been told the basic renderer is pretty much untouched for the games beyond Rome 2, such as Total War: Warhammer, being extended by the engine team to increase resolution in the near field.

Most of our current work is under NDA and I’d really love to shout about some of it but it’ll be at least a year before that. However, as a result we have submitted some UE4 modifications that could potentially help all games that use the engine in a big way. The game I was working on had shader instruction count go from ~1800 to ~450 in one particular complicated effect.

One thing we’ve been curious about for a while is how engine pipelines and rendering work with regards to virtual reality titles. Is there any fundamental difference between a VR game’s pipelines and a traditional game’s? What do you believe are some of the hurdles that VR games will face when it comes to graphics?

Latency is everything with VR and this makes it really exciting for low-level engine programming. In the past there has been debate about whether players can feel the difference between 30fps and 60fps, despite the real problem being latency, not fps. This time round there is no denying that if you try to skimp on latency with VR, you are not going to attract the audience – they’ll all be too busy feeling sick and trying to regain the ability to stand up.

What’s interesting is that even the simplest of techniques, like basic shadow mapping and a single light with ambient background, can look very convincing when viewed in stereo. Aliasing is massively distracting and there is no doubt that resolutions need to increase and the quality of the pixel needs to improve.

So there is growing literature on how you can identify results that can be shared between each eye: e.g. how can you do approximate visibility rather than running the query twice? All-in-all, we need an almost 4x increase in engine performance and a simpler environment to render.
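
One simple form of that sharing can be sketched as follows. This is an illustrative Python example under stated assumptions, not a technique attributed to Williamson or to any particular engine: a 2D top-down view, both eyes looking straight down +z, and hypothetical names and default values (`ipd`, `half_fov`). Instead of testing each object against two frusta, it tests once against a single conservative frustum that contains both.

```python
import math


def visible_to_eye(point, eye_x, half_fov):
    """2D top-down test: is point (x, z) inside the frustum of an eye at (eye_x, 0)?"""
    x, z = point
    return z > 0 and abs(x - eye_x) <= z * math.tan(half_fov)


def visible_to_either_eye_approx(point, ipd, half_fov):
    """Single conservative test that covers both eyes.

    Equivalent to one frustum with the same field of view whose apex is
    pulled back behind the eyes so that its edges pass through both eye
    positions. It never rejects anything either eye can see, but it may
    accept a little extra.
    """
    x, z = point
    return z > 0 and abs(x) <= z * math.tan(half_fov) + ipd / 2


def cull(points, ipd=0.064, half_fov=math.radians(45)):
    """Run one visibility pass and share the result between both eyes."""
    return [p for p in points if visible_to_either_eye_approx(p, ipd, half_fov)]
```

The trade-off is the usual one for approximate visibility: one query instead of two, at the cost of a few objects being submitted that neither eye ends up seeing.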

Given your experience in the industry, Celtoys’ PC project sounds interesting, especially since it has a “scope never seen before.” Could you tell us more about it or enlighten us to the overall goals you currently have for it?

I’m sitting on the reveal for now but I’ve been dropping hints on my Twitter feed for a while. I’m one man who’s also feeding his family by contracting for others so I need to be sure I’m in a stable position to support the game’s players and manage expectations when I bring it out of hiding.

The gameplay loop is small and simple with many chances for emergent behaviour. However, the scope of the playing field is beyond anything that’s been seen before so I’m hoping to keep the punch line under cover until I can take advantage of it.

"Modern-day consoles now come with all the complexity of a PC, their own operating systems that pale in comparison and seem to be scaring the consumer away for a not-insignificant cash price."

We’re seeing more games these days reliably scaling towards lower-end PC hardware. Some examples include Metal Gear Solid 5: The Phantom Pain, Mad Max, etc. Given that the common complaint for PC development is optimizing for a wide range of hardware, how do you feel development is changing these days to accommodate a wider variety of configurations?

I think it’s market driven and potentially a cause for concern for smaller companies. 15 years ago I was optimizing for and creating fallback paths for games that had to run on 50 or so distinct video cards with several different APIs. It was quite literally: video card A has a z-buffer, video card B doesn’t! We had extensive publisher-driven test regimes and they rarely let us off the hook with any bugs.

With the rise of mass-market console gaming and the odd belief that the PC was doomed, games became merely last-minute PC ports; not helped by the increase in software complexity required to build them.

It used to be consoles provided a consumer-oriented benefit to playing games: buy the game, stick it in the slot, play immediately! The PC at the time was wallowing with hardware, software and installation problems and wasn’t as convenient. Modern-day consoles now come with all the complexity of a PC, their own operating systems that pale in comparison and seem to be scaring the consumer away for a not-insignificant cash price.

On top of that you have mobile markets being saturated and the rise of freely-available commercial quality engines that everybody uses. It seems that the next big market is PC and everybody is chasing it, to the point where we might get saturation again.

At the moment I think you need to get in and get out while you can because there’s not long left.

Similarly with Unreal Engine 4, it feels like it will take a while before we see games on PS4 and Xbox One whose visual quality approaches that of the Infiltrator demo. When do you feel we’ll see visuals from the engine which can rival big-budget CGI in movies?

Not for a while. Engine choice notwithstanding, we have many years before we’ll catch CGI, if at all. One look down the rabbit-hole of aliasing with an understanding of why movies look so much better at low resolutions than games at higher resolutions will show a little insight.

In terms of UE4, it’s massively flexible and gives power to many content authors who have been restricted in the past. While you will see much amazing creative output with limited playing fields in the next couple of years, a lot of that flexibility is being used to simply speed up game creation, rather than add more visual fidelity.

The Xbox One and PS4 have now been on the market for two years and there are still more graphically impressive games releasing. What can you tell us about optimizing for the Xbox One and PS4 and do you feel the consoles will outlast the relatively short shelf-life of their components?

These are powerful machines with fixed architectures and some astonishing debug tools. On one of the games I worked on we created some amazing effects for Xbox One that have given us ideas for how it could be visually much better and more performant. The main variable in all this is how many skilled people you have and how much you’re willing to spend.

These days the investment in great engine tech is no longer necessarily rewarding you with better sales. You have fewer developers going with their own solutions, instead investing money in programmers for the core game and those who can maintain a bought 3rd-party engine.

You have some established pioneers like Naughty Dog thankfully making leaps and bounds in this area, but I’m unsure right now whether we’ll see as much progress as we did with the previous generation.

"I’m entirely convinced that some of the routes we are investing money in right now will prove to be financially and environmentally unable to scale. However, the cloud is here to stay and we will fail many times before we find ways to exploit it in the long run."

There’s been lots of footage surrounding DirectX 12, which recently released for PCs alongside Windows 10, and how it’s possible to achieve a new standard for graphics with it. However, it still feels a ways off despite the strong popularity of Windows 10. What do you feel are some of the factors that will determine whether we see more DirectX 12-geared games in the coming years?

Nobody really cared about DirectX 11 because there was assumed to be no money in building your engine for it. With Xbox 360, you already had a lot of the features of DX11 and more, with lower level access and a DX9-like API. Porting this to DX9 for PC was simple enough, with the lion’s share of work going into compatibility and performance – adding a new API was just a recipe for trouble.

The Xbox One and PC seem to have more financial impact on sales than the previous generation and if Microsoft manage the Xbox One DirectX 12 API effectively, the up-take should be good. It’s more complicated to develop for than DirectX 11 but more and more people are using engines like UE4 and Unity so the impact should be lessened.

DirectX 12 will be heading to the Xbox One and there is the belief that it can be used for better looking graphics. What are your thoughts on this and how could DirectX 12 be used to benefit the console hardware overall?

The innovation in DirectX 12 is its ability to unify a large number of GPUs with a single, non-intrusive API; it’s such a great move from Microsoft. I think the Xbox One software team will already have made their version of DirectX 11 very close to the metal so the main benefit will be from an engineering point of view, where you can realistically drop your DirectX 11 version altogether. The simpler platform will allow more time to be invested in optimizing the game.

What are your thoughts on the PS4 API? Do you think it will hold its own when DX12 launches?

I have no experience with the PS4 API but based on many factors it should at least hold its own. The PS4 is a fixed console platform and as far back as the original PlayStation, Sony has been writing the rule book on how the most efficient console APIs should be implemented.

Another area of interest these days is cloud computing, especially given Microsoft’s demonstration of its capabilities with Crackdown 3. Several other areas of gaming like PlayStation Now and Frontier’s Elite Dangerous use cloud computing in their own right. However, what do you think about it being used to fuel graphics and newer scenarios in the coming years? Will there be more reliance on the cloud and less on the actual hardware of the console?

I’m entirely convinced that some of the routes we are investing money in right now will prove to be financially and environmentally unable to scale. However, the cloud is here to stay and we will fail many times before we find ways to exploit it in the long run.

If we break the typical game engine pipeline into pieces there are many parts that offer unique opportunities for distribution. Think of the creation of this new cloud infrastructure as you would the evolution of 3D engines from software renderers to their modern-day GPU-accelerated counterparts: we’re trying to create a big GPU in the sky.

Even if players aren’t part of the same session, they’ll be exploring the same worlds. Work such as global illumination, visibility, spatial reasoning and complex geometry optimization can be factored into their low frequency contributors, clustered, computed and cached on machines thousands of miles away, to be shared between many players. Whatever client hardware is used to retrieve this data will augment that in ways that don’t make sense to distribute.
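
The "compute once, share between many players" idea can be sketched in a few lines of Python. Everything here is hypothetical and for illustration only: the class name, the `fake_bake` placeholder standing in for an expensive low-frequency job such as a lighting bake, and the region and player names. A real service would also have to deal with invalidation, storage and network latency, none of which is modelled.

```python
class SharedResultCache:
    """Computes an expensive, slowly changing result once per world region and reuses it."""

    def __init__(self, compute):
        self._compute = compute      # hypothetical expensive job, e.g. a lighting bake
        self._results = {}
        self.compute_calls = 0

    def get(self, region_id):
        # Only the first request for a region pays the computation cost.
        if region_id not in self._results:
            self._results[region_id] = self._compute(region_id)
            self.compute_calls += 1
        return self._results[region_id]


def fake_bake(region_id):
    """Placeholder for a costly low-frequency computation."""
    return f"lighting-for-{region_id}"


cache = SharedResultCache(fake_bake)
# Players in separate sessions who happen to explore the same regions.
requests = [("alice", "forest"), ("bob", "forest"), ("carol", "city"), ("dave", "forest")]
served = {player: cache.get(region) for player, region in requests}
```

Four requests trigger only two computations here, because three of the players are looking at the same region.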

Of course the other element to cloud rendering is the ability to write an engine once for the target platform that can be distributed live to many varying devices on your wrist, in your pocket or on a screen in your lounge. We already have examples of this working really well on your local network (e.g. the Wii-U) but I think there’s a long way to go before the issues of latency and cost are solved.

"The life of an engine developer is constantly mired in compromise that the average player will never see. Particles can be rendered at a lower resolution, volumes can be traced with fewer steps, calculations can be blended over multiple frames and textures can be streamed at a lower resolution."

Do you think that, with the emergence of cloud-based graphics processing as in the case of Microsoft’s Xbox One, hardware-based consoles will be a thing of the past? Do you think the next-gen consoles, say the PS5 and the next Xbox, will be services rather than actual consoles?

The clients will certainly get thinner to a degree. As discussed above, it’s a case of figuring out which bits of the hardware will get placed where.

I wanted to talk a bit about the differences between the PS4 and Xbox One GPUs and their ROP counts. Obviously one has a higher ROP count than the other, but do you think these are just numbers and the difference does not matter in practical scenarios?

Data such as this really doesn’t matter as different hardware achieves the same effects in different ways. Coupled with different engines being built to take advantage of different hardware peculiarities, the numbers really only make sense if you want to build graphs of random data and strenuously imply one is better than the other. The obvious caveat here is that both are modified GCN architectures (Liverpool and Durango) so they’re closer to each other and easier to compare than previous generations.

A great example of this was the old PS2: you had effectively 2MB of VRAM left after you stored your frame buffer. The Dreamcast had 8MB total and the Xbox had 64MB shared RAM. I remember at the time everybody going crazy about this trying to demonstrate the inferiority of the PS2 in comparisons. What was hard to explain at the time was the PS2’s DMA system was so fast that if you were clever enough, you could swap this out 16 times a frame and get an effective 32MB of VRAM.
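
The arithmetic behind that last figure is simple enough to write down. The Python below is only a worked check of the numbers quoted in the answer; the function and parameter names are made up for illustration, and it says nothing about how the swapping was actually scheduled.

```python
def effective_vram_mb(resident_mb, swaps_per_frame):
    """Amount of texture data that can pass through a small VRAM window in one frame."""
    return resident_mb * swaps_per_frame


# Figures quoted above: about 2MB free after the frame buffer, refilled 16 times a frame.
ps2_effective = effective_vram_mb(resident_mb=2, swaps_per_frame=16)
print(ps2_effective, "MB of texture data touched per frame")
```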

As a developer yourself, I am sure you are aware of sacrificing frame rate for resolution and vice versa. This seems to be happening a lot this gen. Why are resolution and frame rate connected to each other and why do you think developers are struggling to balance the combination?

Resolution is only connected to frame rate insomuch as it’s one of the easiest variables to change when you’re looking to gain performance quickly. It’s also one of the most visible and easy to measure compromises. If you go from 1080×720 to 1080×640 you can reduce the amount of work done by roughly 10%. If your pixels are one of the bottlenecks, that immediately transforms into performance, leaving the rest to a cheap up-scaler.
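
As a rough check of that arithmetic: per-pixel work scales with width times height, so the saving for the resolutions quoted works out to about one ninth. The Python sketch below also estimates the effect on frame time, under the simplifying assumption that only a fixed share of the frame is pixel-bound. The 36ms frame time and the 50% pixel-bound share are invented values for illustration, not figures from the interview.

```python
def pixel_work_saved(old_res, new_res):
    """Fraction of per-pixel work removed by dropping from old_res to new_res."""
    old_w, old_h = old_res
    new_w, new_h = new_res
    return 1.0 - (new_w * new_h) / (old_w * old_h)


def estimated_frame_ms(frame_ms, pixel_bound_fraction, saved):
    """Scale only the pixel-bound part of the frame; the rest is left unchanged."""
    return frame_ms * (1.0 - pixel_bound_fraction * saved)


saved = pixel_work_saved((1080, 720), (1080, 640))
# Hypothetical frame: 36ms in total, half of it spent on per-pixel work.
new_ms = estimated_frame_ms(frame_ms=36.0, pixel_bound_fraction=0.5, saved=saved)
print(f"pixel work saved: {saved:.1%}, estimated frame time: {new_ms:.1f}ms")
```

The second function is why the gain depends on where the bottleneck is: if little of the frame is pixel-bound, lowering the resolution buys very little.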

The life of an engine developer is constantly mired in compromise that the average player will never see. Particles can be rendered at a lower resolution, volumes can be traced with fewer steps, calculations can be blended over multiple frames and textures can be streamed at a lower resolution. It’s a hard road and making compromises that the player will never see is difficult and rife with potential side-effects that can take days or months to emerge. As such, making these deep changes late in a project is more risky and less likely to be accepted.

Changing resolution is easier with the most visible side-effect of more aliasing. Late in the game, if you’re going to be criticized anyway, frame rate with minimal tearing is king and most would rather take the punches on resolution.